AI Pricing: AI as extension or platform

Steven Forth is a Managing Partner at Ibbaka. See his Skill Profile on Ibbaka Talio.

SaaS companies have been frantically at work over the past year adding AI to their solutions, prompted in part by the dramatic success of OpenAI’s ChatGPT and ChatGPT Pro and of Microsoft 365 Copilot. They have taken three basic approaches.

Add functionality into an existing application (that is what Squarespace, the platform I am authoring on, has done)

Add a brand new AI-based offer

Add AI as an extension to the platform with an extension packaging pattern

We have discussed the logic for each of these approaches in A simple framework for generative AI pricing strategy.

Recently, we have seen something new and important. Companies that had begun by creating an AI module as an extension to their current platform are inverting this and making AI the platform and the existing platform the extension.

This is one of the eight actions one can take using a modular operator.

Note: The original idea for modular operators comes from Design Rules: The Power of Modularity by Carliss Baldwin and Kim Clark.

This is an application of the Invert operator, where, in a way, the parent becomes the child and the child the parent. This is one of the most radical packaging steps one can take. Why are people considering this?

For the companies we are speaking to, there are 3 main reasons.

Customers are asking for this: they want the power of AI and they want it across all of the business processes supported.

The value drivers for AI are general and apply across all of the functionality; AI is not specific to just one piece of functionality.

Costs for AI are significant and are better managed when incorporated into the platform and tracked at that level.

This sort of inversion happens only with fundamental change, when a new paradigm takes over and reframes everything on its own terms. AI is such a paradigm shift.

Customers are asking for this

People are buying the AI hype. Most buyers have experience with ChatGPT and many have subscribed to the pro version and accepted the price point of $20 per user per month (See Market acceptance of ChatGPT Pro pricing).

They are asking …

“If AI is so powerful and so relevant to all of our business processes, why is it being carved out into a separate module and sold at a premium? Why isn’t AI the platform that everything else is built on?”

“I can’t afford to pay extra money for every user on every application. This is starting to add up and get out of control. I am going to be selective about what AI modules I pay for.”

“I want to see the results from adding in AI. Will it really make my business that much better? How exactly? Can I pay once I have seen the results?”

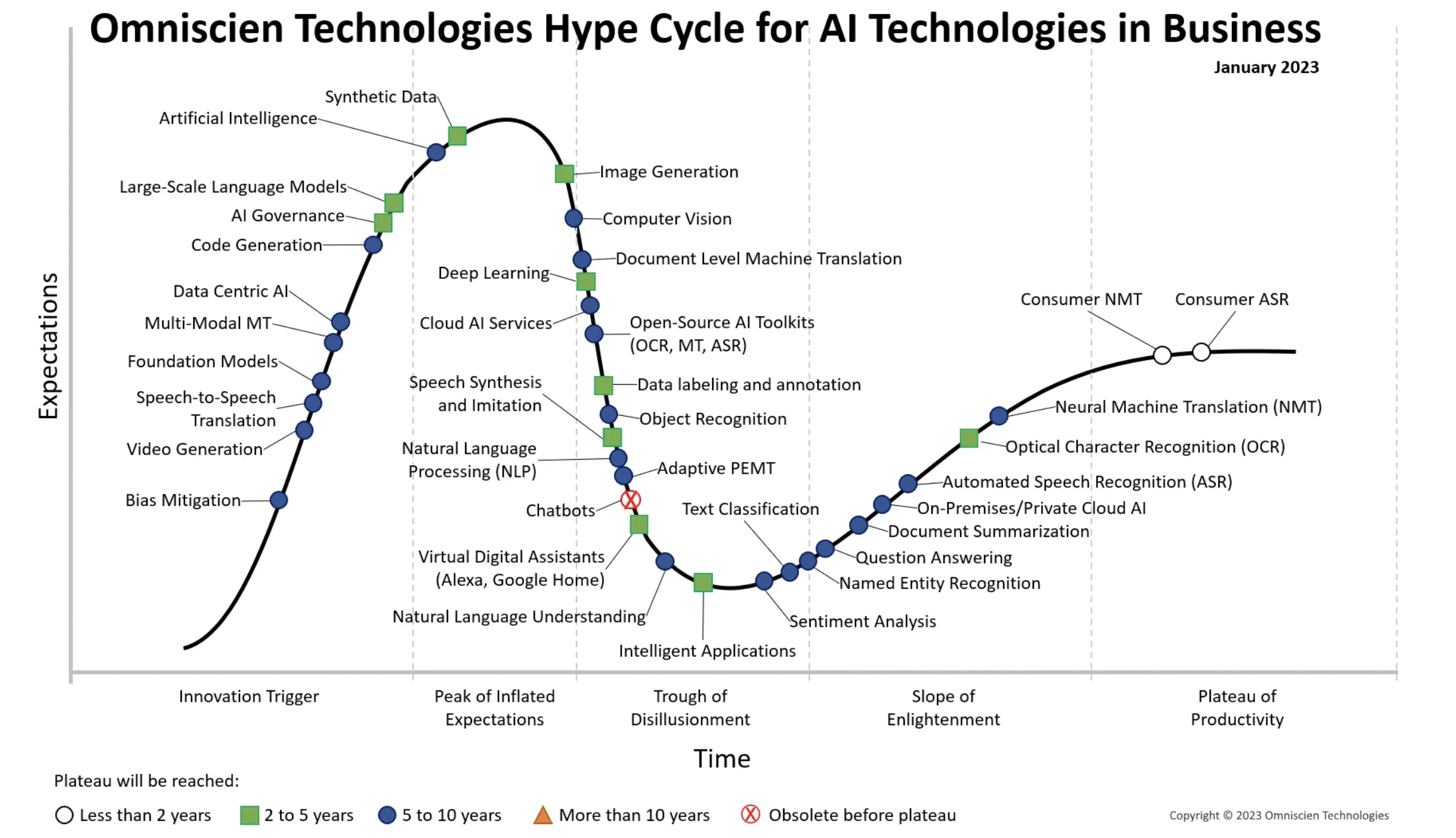

Some people think we are getting ahead of ourselves and that generative AI is still early in the hype cycle. Here is the Omniscien hype cycle for AI.

The interesting thing here from a pricing perspective is that, according to Omniscien, Virtual Digital Assistants and Intelligent Applications will soon be through the ‘trough of disillusionment’ and will start their climb up the ‘slope of enlightenment.’ In other words, they are getting real. There are opportunities for value-based pricing here.

The value drivers for AI are general

Another reason to invert the architecture and make AI the platform is that the capabilities introduced by generative AI are general and can be used in many different applications. Each module can feed data down into the model, and cross-connecting data from different sources can accelerate model evolution. The model is general; the specific predictions and questions the model answers can be specific to a module, application, or use case.

Costs for AI are significant

AI is not cheap. It is expensive to build, evolve, and maintain models, and each time they are used there are costs. It will be easier to manage these costs if they are all in one place. Having multiple models scattered across different services will make optimizing operations a lot more difficult. We are returning to a time when streamlining and finding computing efficiencies matter. In many cases, it will be easier to do this with the AI as a platform.

Why and why not?

So if you currently offer your advanced AI functionality as a module, either an independent module or as an extension to your platform, should you make the AI the platform? Well, it depends.

Decisions to make

If you have decided to move to AI as the platform, there are a number of follow-on pricing decisions.

You will probably want to have a hybrid pricing model, combining subscriptions with some form of variable pricing. This design is supported by research from Zuora, Maxio, and Pendo-OpenView, as well as the recent NRR survey by PeakSpan and Ibbaka.

So one question is whether you put the variable pricing at the module or platform level.

It goes at the platform level when there are common functions, provided by the AI, that deliver value across all modules dependent on usage or scale.

If the scaling or usage value is different for each module, then the variable pricing goes at the module level.

Could you have both?

Yes, but this introduces a lot of complexity and there can be weird interactions. It is harder to explain to customers and is often seen as double counting. Keep your pricing design as simple as possible (but no simpler, as Einstein is said to have said).
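The difference between the two placements can be made concrete with a small sketch. Everything here is an assumption for illustration: the module names, the usage counts, and the rates (in cents per unit) are hypothetical and do not come from any real price list.

```python
from dataclasses import dataclass


@dataclass
class ModuleUsage:
    name: str
    units: int  # hypothetical usage units, e.g. AI-assisted actions


def platform_level_charge(usage, cents_per_unit):
    """Platform-level variable pricing: one shared rate on total usage."""
    return sum(m.units for m in usage) * cents_per_unit


def module_level_charge(usage, cents_per_unit_by_module):
    """Module-level variable pricing: each module has its own rate."""
    return sum(m.units * cents_per_unit_by_module[m.name] for m in usage)


# Hypothetical usage for two hypothetical modules.
usage = [ModuleUsage("billing", 1000), ModuleUsage("support", 4000)]

# Same value per unit everywhere: a single platform rate is enough.
platform_total = platform_level_charge(usage, 2)

# Different value per unit in each module: rates are set module by module.
module_total = module_level_charge(usage, {"billing": 5, "support": 1})

print(f"platform-level: {platform_total} cents")
print(f"module-level:   {module_total} cents")
```

Charging both at once would mean the same unit of usage appears in two line items, which is where the double-counting perception comes from.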

A second question is how much of the price should come from each of the following:

The platform subscription

The module subscription

Variable pricing

Below are 3 options shown across 3 scenarios.

Under normal use, each balancing generates the same revenue. Under high use, you win by weighting towards usage, but of course, under low use revenue will go down.
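A minimal sketch of this comparison follows. The three price mixes and the three usage levels are hypothetical numbers chosen so that every mix totals 100 at normal use; they are assumptions for illustration, not Ibbaka data.

```python
# Hypothetical price mixes. Each tuple is (platform subscription,
# module subscription, usage revenue at normal use), in arbitrary units.
OPTIONS = {
    "subscription-weighted": (60, 30, 10),
    "balanced": (40, 30, 30),
    "usage-weighted": (20, 20, 60),
}

# Hypothetical scenarios: usage as a percentage of normal use.
SCENARIOS = {"low": 50, "normal": 100, "high": 150}


def revenue(option, usage_pct):
    """Subscriptions are fixed; only the usage component scales."""
    platform, module, usage_at_normal = option
    return platform + module + usage_at_normal * usage_pct // 100


# Revenue for every option under every scenario.
table = {
    name: {scenario: revenue(mix, pct) for scenario, pct in SCENARIOS.items()}
    for name, mix in OPTIONS.items()
}

for name, row in table.items():
    print(f"{name:22} low={row['low']:>4} normal={row['normal']:>4} high={row['high']:>4}")
```

With these assumed numbers, the usage-weighted mix has the widest spread between low and high use, and the subscription-weighted mix has the narrowest.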

How to approach balancing?

At Ibbaka, we consider 3 things.

Positioning in the market - What is the long-term product strategy and how important is the platform relative to the modules to that strategy?

Relative contribution to value - If one has a well-designed value model, one can work out which value drivers are delivered through the platform and which through specific modules; one can then adjust the balance to reflect the value.

Revenue predictability - In most cases, subscriptions are more predictable than use, so many people lean towards reliance on subscriptions. Once you have good baseline data, modern predictive analytics systems can generally make usage more predictable. Usage also tends to track value better.

In many cases, this balance will change over time as the relative value of the modules and platform changes (new functionality at each level, richer models, better understanding of usage and value). Pricing is adaptive.
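The relative contribution to value consideration can be sketched as a simple calculation. The value drivers, the level each is delivered through, and the value amounts below are all hypothetical; a real value model would supply them.

```python
from collections import defaultdict

# Hypothetical value model: (value driver, delivered through, annual value).
value_drivers = [
    ("faster document drafting", "platform", 60_000),
    ("cross-module insights", "platform", 60_000),
    ("invoice matching", "module", 50_000),
    ("ticket triage", "module", 30_000),
]


def value_shares(drivers):
    """Return the share of total value delivered at each level."""
    totals = defaultdict(int)
    for _name, level, value in drivers:
        totals[level] += value
    grand_total = sum(totals.values())
    return {level: total / grand_total for level, total in totals.items()}


shares = value_shares(value_drivers)
for level, share in shares.items():
    print(f"{level}: {share:.0%} of value")
```

These shares are only a starting point for the balance; positioning and revenue predictability may pull the final split in a different direction.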

All innovation becomes a commodity over time

One thing to remember is that over time general platforms become commoditized and disappear from sight. The best example of this is databases. We all use databases in just about all of our applications. We seldom pay for them directly, and when we do, the pricing is transactional on AWS or Azure, and we are often relying on an open-source alternative. This will happen to general AI platforms over the next 10 to 20 years. Once a new technology reaches the ‘plateau of productivity’ it becomes more and more commoditized and fades into the background.