AI pricing: Your next proposal will be evaluated by an AI

Steven Forth is CEO of Ibbaka. See his Skill Profile on Ibbaka Talio.

Over the past few months, we have noticed an interesting trend. More and more companies are using generative AI applications to evaluate proposals. This has many implications for B2B companies and will lead to changes in pricing practices.

The implications are different for Product Led Growth, Sales Led Growth and Service Led Growth (Service as Software) companies, but all B2B SaaS companies need to act now to address this.

Why are companies using Generative AI to evaluate alternatives?

It is not easy to gather all the information needed to compare solutions and, having done so, to compare the alternatives. In most cases, there are too many factors to consider and too much information to process.

As a result, decisions are based on relationships, a few compelling selling points, and case studies. Companies tend to go with the dominant vendor not because it offers the best solution, but because it is easiest to buy. We see this today in the generative AI market, where many companies default to OpenAI without considering the alternatives (Ibbaka’s approach is to run key prompts against at least two and usually three different models).

The decision-making process is also fraught. In most B2B sales, multiple stakeholders are involved, all with different agendas and the ability to influence the decision. This often results in faulty decision-making where the loudest voice wins.

Many people are thinking ‘there has to be a better way’ and are trying out generative AI to get more balanced and objective proposal reviews. The final decision still rests with people, but it is informed by an AI.

How is Generative AI being used to evaluate proposals?

The direct approach, where one uploads a number of proposals to one’s preferred AI and asks for a comparison, does not work well. The results can be inconsistent and hard to interpret. One needs to provide the AI with more context and direction.

In Ibbaka’s first pass at this, we compared proposals to each other in paired comparisons, then took the top four and asked the AI to rank them.

We found this gave a lot of insights and helped us make a decision, but we wanted to find ways to make the process better.

The first thing we did was to create a rubric for scoring the proposals across multiple dimensions. A rubric is a scoring guide that defines the dimensions on which something will be scored and provides definitions, examples and criteria, as well as the range of possible scores.

These dimensions can change depending on the type of proposal, but here are some that we find valuable.

Relevant experience

Process clarity

Deliverables clarity

Timeline

Value (economic and other)

Price

Value ratio (is the price commensurate with value)

Goal fit

Probability of goal attainment

Of course, one can use a generative AI to help to generate and evolve the rubric.
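A rubric of this kind can be written down as a small data structure. The following is a minimal sketch, not Ibbaka's implementation: the `Dimension` class, the 1 to 5 score range, the weights and the placeholder definitions are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dimension:
    name: str
    definition: str
    min_score: int = 1   # assumed score range, not from the article
    max_score: int = 5
    weight: float = 1.0  # assumed weighting, not from the article


# Illustrative subset of the dimensions listed above; definitions are placeholders.
RUBRIC = [
    Dimension("Relevant experience", "Evidence of similar past work"),
    Dimension("Process clarity", "How clearly the approach is described"),
    Dimension("Value ratio", "Is the price commensurate with value", weight=2.0),
    Dimension("Goal fit", "How well the proposal matches stated goals", weight=2.0),
]


def weighted_score(rubric, scores):
    """Return the weighted average of per-dimension scores, normalized to 0..1."""
    total = 0.0
    weight_sum = 0.0
    for dim in rubric:
        raw = scores[dim.name]
        if not dim.min_score <= raw <= dim.max_score:
            raise ValueError(
                f"{dim.name}: score {raw} outside {dim.min_score}-{dim.max_score}"
            )
        normalized = (raw - dim.min_score) / (dim.max_score - dim.min_score)
        total += dim.weight * normalized
        weight_sum += dim.weight
    return total / weight_sum


def rubric_as_prompt(rubric):
    """Render the rubric as plain text that can be pasted into a prompt."""
    lines = ["Score the proposal on each dimension:"]
    for dim in rubric:
        lines.append(
            f"- {dim.name} ({dim.min_score}-{dim.max_score}): {dim.definition}"
        )
    return "\n".join(lines)
```

Keeping the rubric as data makes it easy to revise between rounds and to send the same text to more than one model.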

A simple architecture for using generative AI to evaluate proposals and pricing

Here is how the pieces fit together.

Use a generative AI to create the rubric (this should be an adaptive document, updated for each round)

Evaluate the proposals by uploading both the rubric and the proposals to a generative AI, so that the rubric provides the context for the evaluation

Try using more than one large language model (LLM) when you do this as the common models can give quite different results

Consider using ‘round robin’ comparisons where each proposal is compared with all of the other proposals, then compare all of the proposals at once

Go deeper and compare the proposals on each specific dimension in the rubric

Both rank order the proposals and ask the generative AI to make a recommendation

Make a choice (this is generally done by a group of people; we are talking about human-in-the-loop AI here)

Evaluate the outcome; the same rubric used for the proposal evaluation, or a modified version of it, can be used (outcome evaluation using generative AI is its own large topic)

Use the outcome to update the rubric
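The round robin step and the multi-model check in the list above can be sketched as follows. This is an illustration under stated assumptions: `compare` is a hypothetical callable that would wrap a prompt to an LLM, and `fake_compare` with its made-up scores only stands in for that call so the example runs on its own.

```python
from collections import Counter
from itertools import combinations


def round_robin(proposals, compare):
    """Compare every pair of proposals once and count wins per proposal.

    `compare(a, b)` is a hypothetical callable returning the name of the
    preferred proposal; in practice it would wrap a prompt to an LLM.
    """
    wins = Counter({name: 0 for name in proposals})
    for a, b in combinations(proposals, 2):
        winner = compare(a, b)
        if winner not in (a, b):
            raise ValueError(f"compare returned {winner!r} for pair ({a}, {b})")
        wins[winner] += 1
    return wins


def rank(wins):
    """Rank by number of wins (descending), breaking ties alphabetically."""
    return sorted(wins, key=lambda name: (-wins[name], name))


def agreement(rankings):
    """Share of models whose top pick matches the most common top pick."""
    tops = Counter(ranking[0] for ranking in rankings)
    return tops.most_common(1)[0][1] / len(rankings)


# Stand-in for an LLM call: prefers the proposal with the higher made-up score.
FAKE_SCORES = {"A": 0.62, "B": 0.81, "C": 0.47}


def fake_compare(a, b):
    return a if FAKE_SCORES[a] >= FAKE_SCORES[b] else b


wins = round_robin(list(FAKE_SCORES), fake_compare)
print(rank(wins))  # ['B', 'A', 'C']
```

Running `round_robin` once per model and passing the resulting rankings to `agreement` gives a rough signal of how much the models disagree, which is useful input for the people making the final choice.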

What are the implications for Product Led Growth Companies?

Product Led Growth companies need to redesign their websites so that they are providing the information that the AI wants to use in the comparison. It should be easy for the AI to find the relevant information. What this information is will depend on the rubric being used by the buyer, so one way to tilt the field is to provide a rubric for buyers to use.

Build your own rubric, one that you think captures the buying and decision making process, and then compare your site with competitors.

Pages to focus on are generally:

Descriptions of the solution

Descriptions of use cases and Jobs to be Done

Case studies

The pricing page

Descriptions of how you provide customer support and customer success

The value page or value calculator (as generative AI will not generally input data for you, have a number of examples where sample data has already been entered)

What are the implications for Sales Led Growth Companies?

Evaluation of proposals using generative AI will have the most impact on sales led growth companies.

Again, a good tactic will be to offer a rubric to buyers early in the buying process. They may or may not use it, but it allows you to set the stage and shape thinking.

Make sure you test your proposal against the rubric. You may want to test several different versions of the proposal and compare the scores.
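One simple way to compare versions is to look at how each rubric dimension moves between drafts. The sketch below assumes the per-dimension scores have already been obtained from an AI evaluation; the function name and the scores themselves are made up for illustration.

```python
def compare_versions(baseline, candidate):
    """Return per-dimension score changes from baseline to candidate, largest drop first."""
    deltas = {dim: candidate[dim] - baseline[dim] for dim in baseline}
    return sorted(deltas.items(), key=lambda item: item[1])


# Made-up rubric scores (1-5) for two drafts of the same proposal.
v1 = {"Process clarity": 4, "Price": 3, "Value ratio": 2, "Goal fit": 4}
v2 = {"Process clarity": 3, "Price": 3, "Value ratio": 4, "Goal fit": 4}

for dim, delta in compare_versions(v1, v2):
    print(f"{dim}: {delta:+d}")
```

Listing the drops first makes it easy to see what a revision cost as well as what it gained.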

Pricing and value are going to be critical here, so make sure that they are baked into the proposal in a clear way. Using a value story as part of the proposal can help with this (see Value stories are the key to value based pricing success).

What are the implications for Service Led Growth Companies?

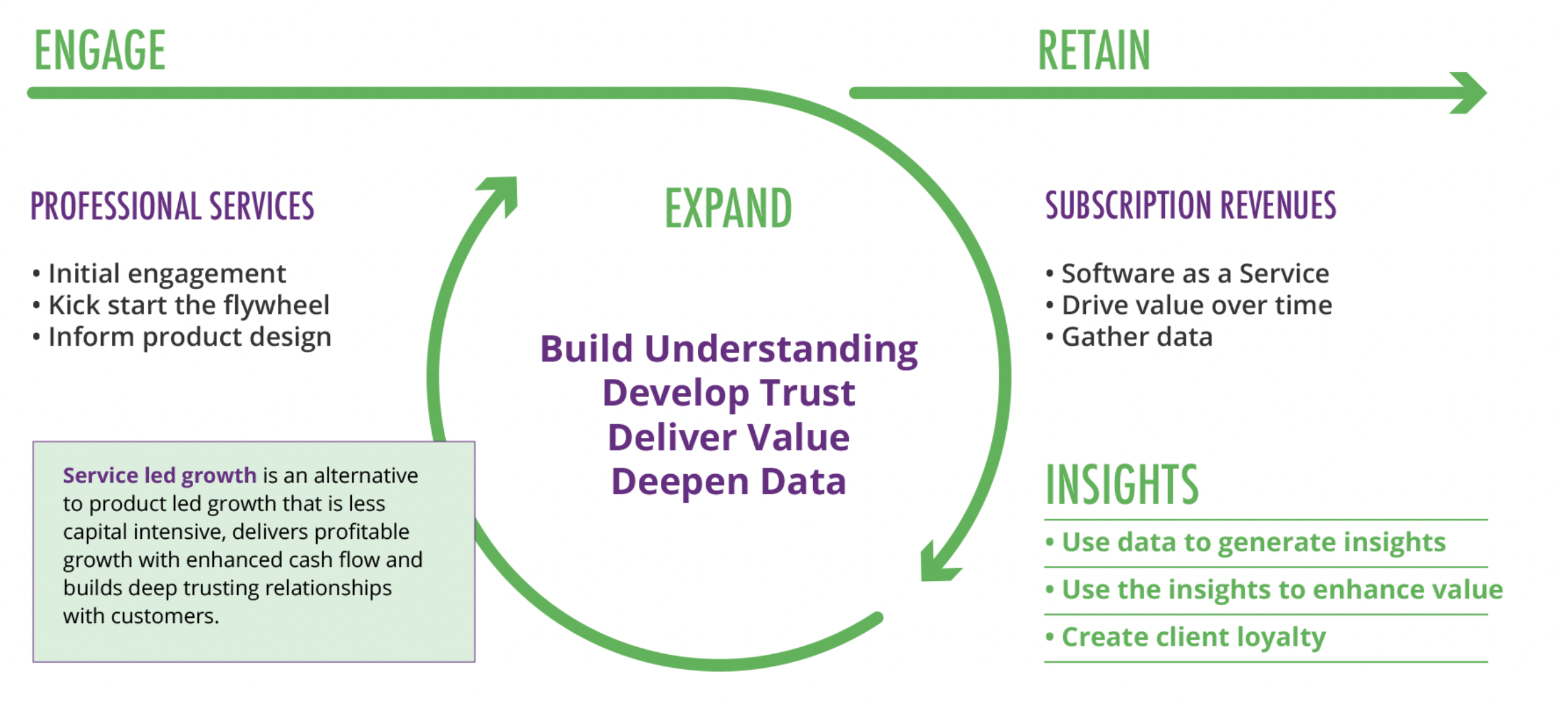

Service led growth companies provide a service through a software platform and then provide the software platform to their customers. This business model fits well with the emerging Service as Software or SaaS 2.0 meme that has become popular of late (see AI leads a Service as Software Paradigm Shift). Service led growth companies have to answer the same questions about experience, process, case studies, value, and pricing as other companies. The Service as Software model becomes compelling when it combines all of the value of a custom service with the value of a scalable software platform. This needs to be built into the evaluation rubric.

How can you prepare?

Start using generative AI to evaluate the proposals your own company receives; this will let you build experience with the overall approach and with the development of rubrics

Develop a rubric for your own solutions and make it available to your customers, get their feedback, and make the rubric a shared document

Run the evaluation on past proposals and see if you can develop a rubric that will predict which proposals will win

Apply the same approach to customer success, and help your customers answer questions about renewal, expansion, and whether they are getting value from a solution
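The idea of running the evaluation on past proposals can be checked with a simple hit rate: how often did the proposal the rubric scored highest actually win? This is a minimal sketch; the record layout, vendor names and scores are assumptions for illustration, not real data.

```python
def hit_rate(past_deals):
    """Fraction of past deals where the top-scored proposal was the actual winner.

    Each deal is a dict: {"scores": {vendor: rubric_score}, "winner": vendor}.
    """
    if not past_deals:
        raise ValueError("need at least one past deal")
    hits = 0
    for deal in past_deals:
        predicted = max(deal["scores"], key=deal["scores"].get)
        hits += predicted == deal["winner"]
    return hits / len(past_deals)


# Made-up history: rubric scores per vendor and the vendor that actually won.
history = [
    {"scores": {"Us": 0.72, "Rival": 0.65}, "winner": "Us"},
    {"scores": {"Us": 0.58, "Rival": 0.70}, "winner": "Rival"},
    {"scores": {"Us": 0.80, "Rival": 0.61}, "winner": "Rival"},
]

print(f"Rubric picked the winner in {hit_rate(history):.0%} of past deals")
```

A low hit rate suggests the rubric is missing a dimension that buyers actually weigh, which is a prompt to revise it.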