Hugging Face and pricing for community led growth

Steven Forth is a Managing Partner at Ibbaka. See his Skill Profile on Ibbaka Talio.

The big news in the AI pricing world last week was Microsoft’s pricing for Copilot. See Microsoft puts a price on AI. Ibbaka is doing a survey on digital assistant pricing that you may enjoy contributing to. That was the biggest news, but it was not the most important news.

Meta announced that it had released Llama 2, the new generation of its open language model. See Meta and Microsoft Introduce the Next Generation of Llama (Microsoft is playing a major role here as well). The Information thinks that OpenAI will need to respond to this move by Meta.

What is Llama (also written LLaMA)? Llama stands for Large Language Model Meta AI. It is Meta’s alternative to OpenAI’s GPT-4 or Google’s PaLM 2. Llama 2 comes in three sizes: 7 billion, 13 billion and 70 billion parameters. These are much smaller than GPT-4, so why should we care?

Llama 2 is available on Hugging Face as an open model.

Wait, ‘Hugging who’?

Hugging Face is emerging as one of the most important hubs for the transformer revolution in AI. It is where people go to find models and tools and to share their work. There are more than 266,000 models available in its repository. Content generation AI is about a lot more than GPT-4 or applications like Copilot.

Hugging Face positions itself as “The AI community building the future.” It is above all a place where like-minded people can support each other.

Why would I want to build or modify a language model?

Many applications of transformer models require augmenting the standard models. They will need training on domain-specific documents and problem sets. Even GPT-4 fails to generate meaningful content when one gets into technical areas or tries to build specific solutions.

For example, Pricing for the Planet is developing something called PrimoGPT.

“Our cutting-edge AI solution, PrimoGPT, is revolutionizing the way businesses approach pricing and monetization. PrimoGPT uses GenAI and advanced algorithms to provide intelligent, data-driven recommendations on pricing and monetization strategies, inherently incorporating sustainability. It's not just about making profits; it's about doing so responsibly and sustainably. With PrimoGPT, you can achieve your business objectives while also contributing to a sustainable future.”

At Ibbaka, we are exploring the use of Generative AI to create value stories from value models, which means we need to train a model with both value models and value stories. At some point, we may even be able to derive a pricing model from a value model using AI (this will probably require the kind of hybrid approach that Stephen Wolfram has written about).

How is Hugging Face priced?

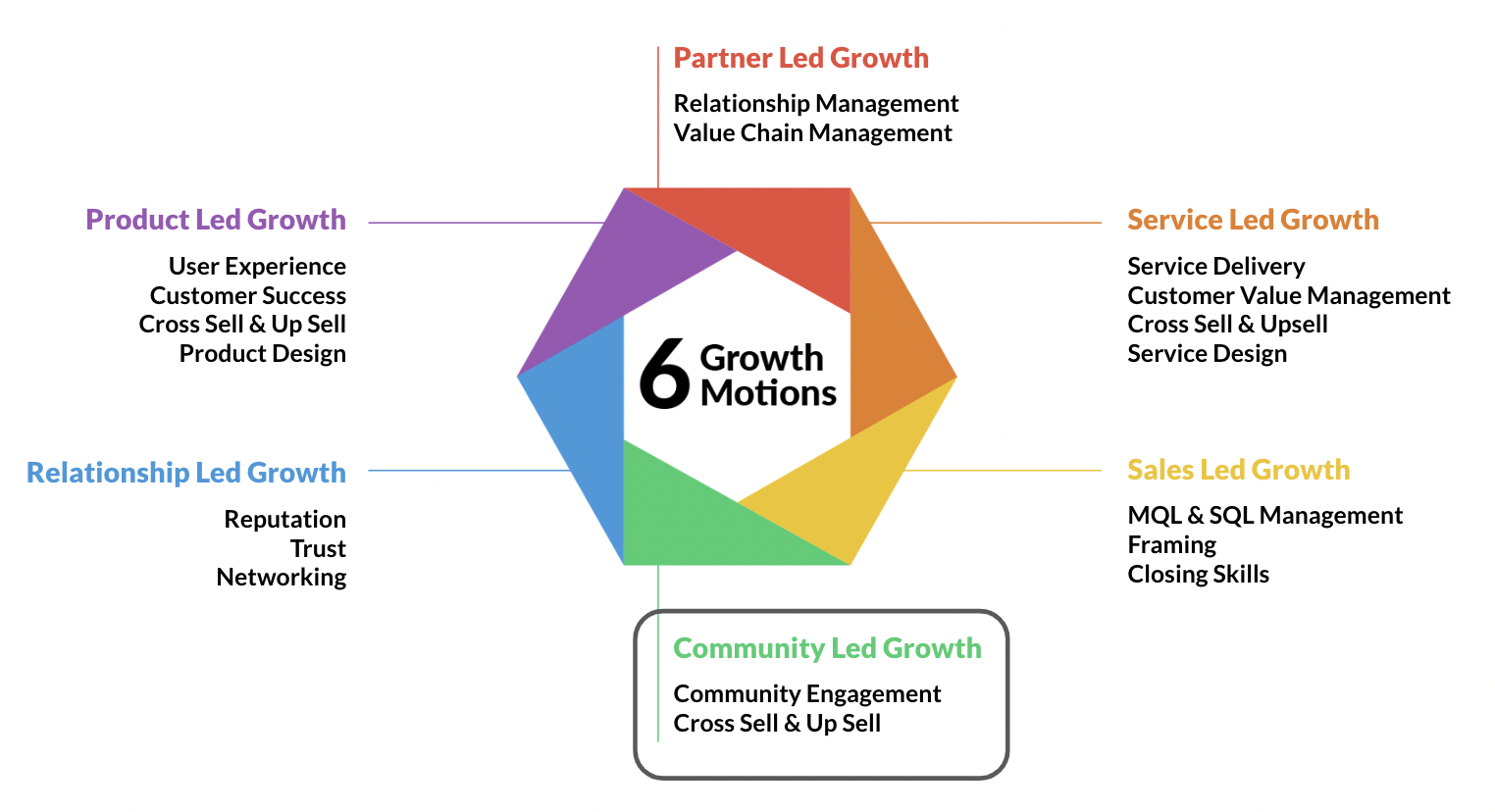

Hugging Face is an example of Community Led Growth. It is one of the six growth motions that we see in the SaaS market.

Community led growth works when there is a reason for a community to exist and for its members to interact with each other. Hugging Face has achieved this. In addition to the 266,000+ models that have been shared, there are more than 42,000 datasets and 5,000 organizations on board (including virtually all of the key AI companies).

Hugging Face pricing

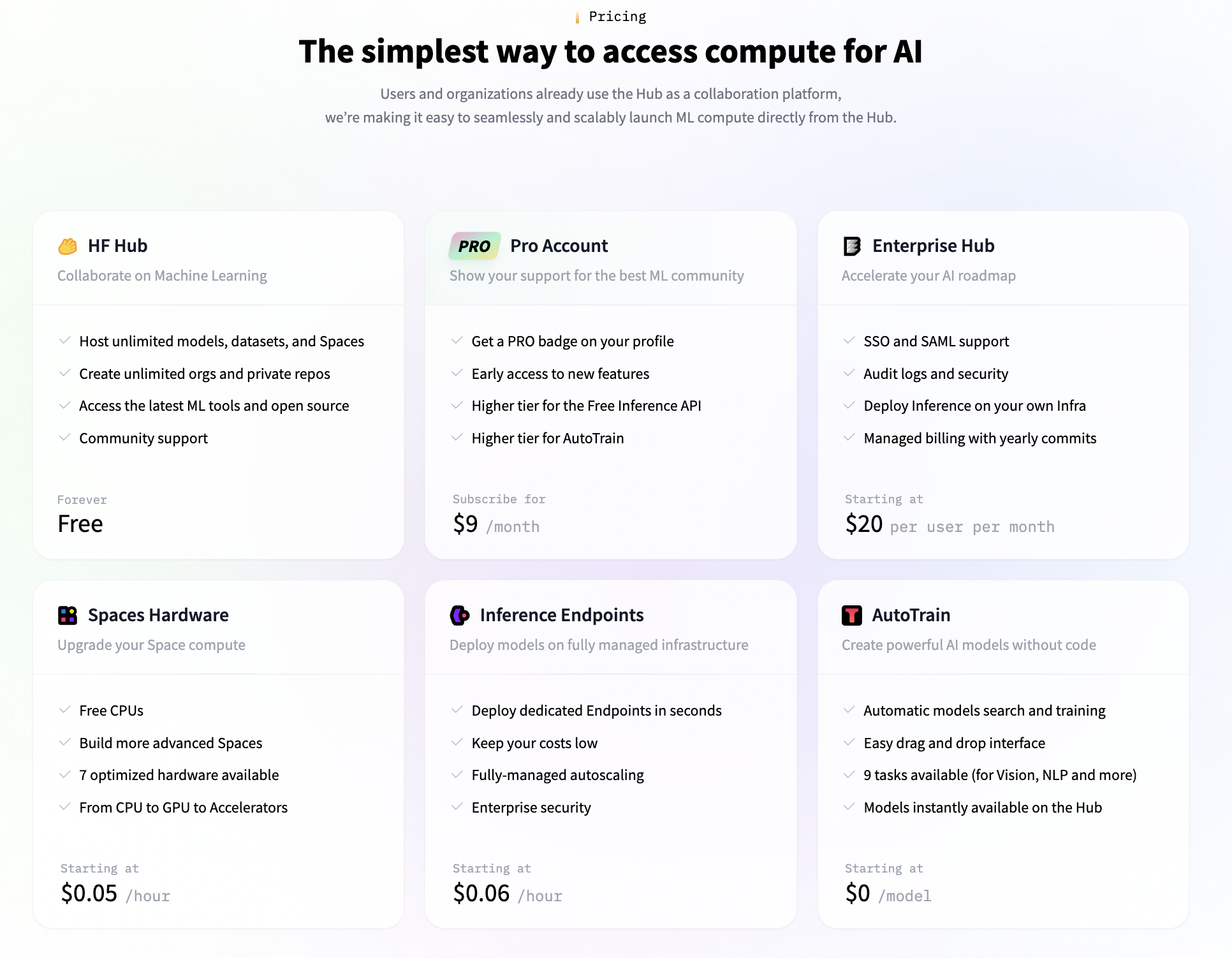

So how does all this get paid for? Hugging Face has a pricing page.

There are two pricing metrics here: ‘per user’ and ‘per hour.’

The per user metric applies to the Pro Account and to the Enterprise Hub. The Enterprise Hub is more than 2X the price of the Pro Account per person. I suspect that Hugging Face wants to get as many independent developers in as possible, knowing that they will drive both enterprise adoption and use. It can then charge more for people connected to an Enterprise Hub.

The Enterprise Hub has the expected fences around single sign-on, audit trails and even the ability to deploy inference to one’s own infrastructure (which, I assume, could be one’s own infrastructure on AWS or Azure).

Spaces Hardware (the compute behind the spaces where you build, train and use your models) and Inference Endpoints (where your applications connect to leverage the AIs you have on Hugging Face) use ‘per hour’ as a pricing metric.

This seems odd to me. Per hour is not a pricing metric one sees a lot for these use cases. Clicking in provides more detail, but not more insight into the motivation for this pricing metric.

You can get more details on endpoint pricing too.

I am glad to have the detail, but now I need to go over and compare this with our own AWS pricing and see what makes the most sense from a cost perspective. Note that the Nvidia architectures, which are much better at running transformers with their parallel attention heads, are priced more than 10X higher than Intel architectures. This helps explain why Nvidia has become such a valuable company.
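To make that kind of comparison concrete, here is a minimal Python sketch of the arithmetic involved. It turns a per-hour rate into a monthly bill for a given running schedule, and into an effective cost per request at a given load. The function names and the rates in `ILLUSTRATIVE_RATES` are hypothetical placeholders chosen for illustration; they are not actual Hugging Face or AWS prices, and real figures should be taken from the vendors' pricing pages.

```python
# Hypothetical placeholder rates in USD per hour.
# These are NOT actual Hugging Face or AWS prices; substitute real quotes.
ILLUSTRATIVE_RATES = {
    "cpu_endpoint": 0.10,
    "gpu_endpoint": 1.20,
}


def monthly_cost(hourly_rate, hours_per_day=24.0, days_per_month=30):
    """Cost of keeping one instance running on the given schedule."""
    return hourly_rate * hours_per_day * days_per_month


def cost_per_request(hourly_rate, requests_per_hour):
    """Translate a per-hour price into a per-use price at a given load."""
    if requests_per_hour <= 0:
        raise ValueError("requests_per_hour must be positive")
    return hourly_rate / requests_per_hour


if __name__ == "__main__":
    for name, rate in ILLUSTRATIVE_RATES.items():
        always_on = monthly_cost(rate)
        office_hours = monthly_cost(rate, hours_per_day=8, days_per_month=22)
        print(
            f"{name}: always on ${always_on:,.2f}/month, "
            f"office hours ${office_hours:,.2f}/month"
        )
```

The second function is the more interesting one from a pricing perspective: under per-hour pricing the effective price per use falls as utilization rises, so a lightly used endpoint pays far more per request than a busy one.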

The ‘per user’ pricing seems fair to me, but I am not excited about the ‘per hour’ pricing. There should be a better way to map price to use to value. This can be hard when there is such a diversity of use cases. I wonder if a model can be trained to help with this!