AI Pricing: Shaping the Future of Business Strategies

Liam Hannaford is the Marketing Manager at Ibbaka

Ibbaka does a lot of work on how to price innovation, and one of the main drivers of innovation today is AI, especially Generative AI, where large language models built on the transformer architecture are used to create new functionality and value.

Pricing is a critical factor that can make or break the success of a new innovation. As businesses strive to stay competitive and meet the demands of their customers, they are investing in new functionality. This is a costly investment, and the value created will have to be captured one way or another. AI pricing is a critical theme for all of us going forward.

Themes for AI Pricing

AI Pricing Studies on:

1. How to Price AI: A General Framework

AI will transform all parts of the software industry over the next few years. If ‘software is eating the world’ (Marc Andreessen) then AI is eating software. We need to develop a framework for pricing AI and understand how the value metrics and pricing metrics for AI will work. This is an exploration of this topic.

What does ChatGPT have to say about pricing AI? You can see the questions and responses right here.

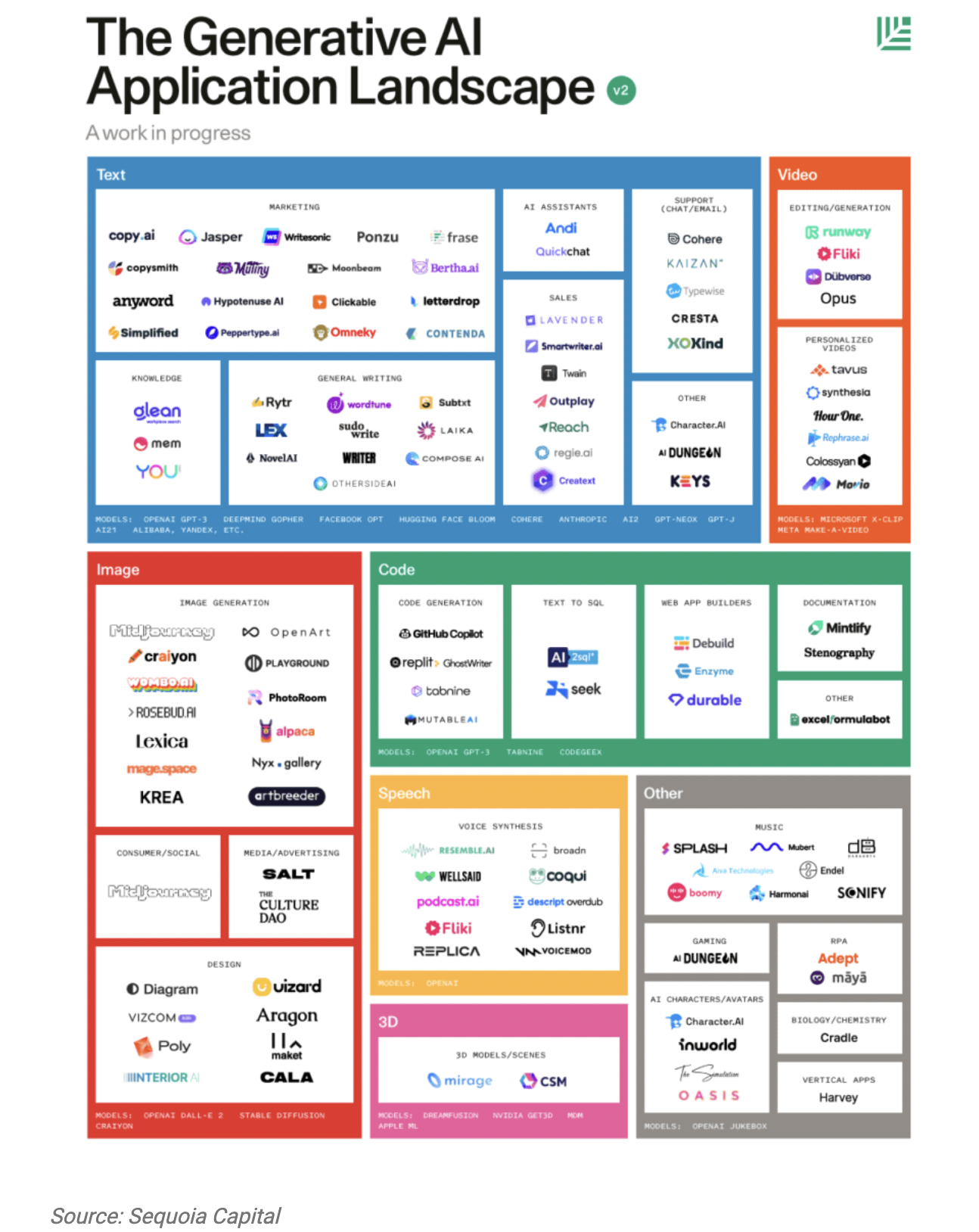

How can we organize AI applications?

Before we can talk about pricing AI applications, we need a way to organize the different types of applications. This is not an easy task as new applications appear every day and AI is being applied much more widely, and more quickly, than most of us expected.

Earlier this year, we proposed the parallel hypothesis:

If one can use AI to describe or categorize a piece of content, one can use AI to generate similar content.

This hypothesis is proving to be true, with new AI applications able to generate more and more types of content, from diagnosis and recommendations, to code and designs, to reports, dialogs, voices, music, and many forms of art.

There are 5 main types of AI applications:

Classification

Pattern Recognition

Recommendation

Prediction

Generation

The value metrics (and therefore, the pricing metrics) will be different for each of these application areas.

1. Classification

Classification AI applications involve categorizing data into distinct classes or groups based on predefined criteria. From email spam filters to sentiment analysis in social media, classification AI plays a crucial role in sorting vast amounts of data, enabling businesses to make informed decisions and streamline their operations.

2. Pattern Recognition

Pattern recognition AI focuses on identifying recurring patterns or trends within datasets. This application is commonly used in image recognition, speech recognition, and natural language processing. Pattern recognition AI powers technologies like facial recognition and voice assistants, revolutionizing user experiences and enhancing security measures.

3. Recommendation

AI-powered recommendation systems analyze user behavior and preferences to offer personalized suggestions. Popularly employed in e-commerce platforms and content streaming services, recommendation AI enhances customer engagement and increases sales by providing users with tailored content and product recommendations.

4. Prediction

AI applications in prediction aim to forecast future outcomes based on historical data patterns. Predictive analytics is widely used in various industries, including finance, healthcare, and marketing, to optimize decision-making and anticipate market trends.

5. Generation

Generation AI applications are at the forefront of the parallel hypothesis, as they focus on producing new content autonomously. This includes AI-generated artwork, music compositions, creative writing, and even software code. As generation AI evolves, it holds immense potential in transforming industries and creative pursuits.

Tailoring Value and Pricing Metrics

The diversity of AI applications necessitates tailoring value and pricing metrics to each specific area. Businesses must understand the unique benefits AI brings to their operations and the impact it has on their customers to set appropriate pricing strategies.

For instance, the value derived from a recommendation AI system may be measured by increased customer retention and revenue growth, while the value of generation AI may be evaluated based on creative output and efficiency gains.

The AI Ecology

AI applications are created using a complex set of technologies and resources. One way to organize these is shown below. Different parts of this ecology deliver value, and are therefore priced, in different ways.

Infrastructure: AI development and management, and the applications themselves, run on a complex of custom chips (from chip designers like Nvidia), servers, databases, data pipes, and all the software required to manage complex IT infrastructure. Some large companies develop and manage their own infrastructure, but most rely on cloud vendors for this. Note that in the above sketch, ‘training’ means training a model and developing a model from a set of data.

Data: AIs, especially machine learning AIs, cannot be built without data, lots of data. Data that can be used to build AIs is becoming more and more valuable. Organizations that hold such data are looking for ways to monetize it, whether directly or by providing it to AI companies.

Tools: Programming languages like Python, Lisp, and Julia; frameworks like TensorFlow or PyTorch; and APIs like Keras. This is just the tip of the iceberg. GitHub hosts thousands of tools, and the big AI shops have their own custom versions.

Workflows: With all that data being run through so many different tools, there is a need for orchestration systems that control how data flows through the many different tools. Workflow systems are also used to manage the models generated.

Models: Models are developed from data using tools. An AI is basically a model, static or dynamic, that takes some form of input and generates an output.

Outputs: At the end of the day, we are not looking for models, but the outputs of the model. For many AI applications, it is the outputs that will be priced.

Outcomes: AI outputs are meant to have an impact on the world. The type of impact will depend on the application. An AI that is used to identify and diagnose tumors on an image will impact cancer survival. An image generated by an AI might be packaged as an NFT (Non-Fungible Token) and the outcome could be the price at which it is sold. Pricing outcomes is the future of pricing and will be enabled by ongoing advances in AI.

Pricing Components of the AI Ecology

How we price AI depends on the role played in the ecology.

Basic pricing methods apply, with the goal being to understand the value metric and to find pricing metrics that track it.

Value Driver: An equation quantifying one aspect of how a solution delivers value to a specific customer or narrowly defined customer segment.

Value Metric: The unit of consumption by which a user gets value.

Pricing Metric: The unit of consumption for which a buyer pays.
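One way to check whether a candidate pricing metric tracks the value metric is to compare the two across customers. The sketch below is a minimal illustration, assuming hypothetical per-customer data and using Pearson correlation as the test of "tracking"; a real analysis would start from a proper value model and far more customers.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between two equal-length series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical data for five customers.
# Value metric: hours of work saved per month.
hours_saved = [10.0, 25.0, 40.0, 80.0, 120.0]
# Two candidate pricing metrics.
outputs_generated = [100.0, 240.0, 410.0, 790.0, 1180.0]
seats = [5.0, 3.0, 8.0, 4.0, 6.0]

for name, metric in [("outputs", outputs_generated), ("seats", seats)]:
    print(f"{name}: r = {pearson(hours_saved, metric):.2f}")
```

With these made-up numbers, outputs track value closely while seats barely do, which would argue for an output-based pricing metric. Correlation is only a first screen; it says nothing about causation.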

Here is a brief guide to the different approaches to pricing seen in different parts of the AI ecology.

For the next couple of years, the most common use of AI will be to enhance existing applications. This is an example of sustaining innovation. There will also be cases of disruptive innovation and even cases of category creation. Ibbaka’s approach was shared in Pricing innovation and value drivers.

How can we price the different parts of the AI ecology?

Infrastructure: This is the most developed part of the ecology. Companies like Amazon AWS, Google, IBM, and Microsoft Azure are pricing their AI infrastructure services the way they are pricing their other infrastructure. One pays for servers, databases, the volume of data stored, throughput, and latency. Looking into the details of the pricing plans can give a lot of insight into the strategy of each company.

Data: Generally, data will be priced based on the number of records, the quality of the records, and the relevance of the records. Quality and relevance will differ by application, so it is probable that the same data will be sold at different prices for different applications. This will be easier to do if approaches like Open Algorithms (OPAL) from MIT Connection Science are applied. In this model, the data stays put and the algorithm comes to the data. If this becomes a common approach, the complexity and value of the algorithm may be used to set the value of the data the algorithm is being applied to.

Tools: Today most of the best tools are open source. That is likely to change, though, and some companies will want to find ways to monetize their tools directly. The most likely pricing metric here will be ‘per user.’ More sophisticated companies will price on the outputs of the tools. There are likely to be free versions of each tool to try, followed by Good Better Best packaging that uses a combination of the number of outputs and the functionality available as pricing metrics and as fences between packages.
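The idea that the same records can carry different prices for different applications can be written as a simple formula. This is a minimal sketch, assuming a hypothetical base rate per record, a quality score, and an application-specific relevance weight, all multiplied together; the multiplicative form is our assumption, not an established data-pricing standard.

```python
from dataclasses import dataclass


@dataclass
class DataOffer:
    records: int
    base_rate: float  # hypothetical price per record, in dollars
    quality: float    # 0..1, e.g. share of complete, validated records


# Hypothetical relevance of the same dataset to two applications (0..1).
RELEVANCE = {"fraud_detection": 0.9, "marketing_segmentation": 0.4}


def data_price(offer: DataOffer, application: str) -> float:
    """Price the same records differently depending on the buyer's application."""
    return offer.records * offer.base_rate * offer.quality * RELEVANCE[application]


offer = DataOffer(records=1_000_000, base_rate=0.01, quality=0.8)
for application in RELEVANCE:
    print(application, f"${data_price(offer, application):,.2f}")
```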
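Good Better Best packaging with output and feature fences can be expressed as a small data structure plus a rule for which package a customer lands in. The prices, limits, and features below are hypothetical, and "the cheapest package that fits" is a simplifying assumption about buyer behavior.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Package:
    name: str
    monthly_price: float          # hypothetical list price in dollars
    output_limit: Optional[int]   # fence: outputs per month (None = unlimited)
    features: set[str] = field(default_factory=set)  # fence: functionality


PACKAGES = [
    Package("Free", 0.0, 50, {"core"}),
    Package("Good", 49.0, 1_000, {"core"}),
    Package("Better", 199.0, 10_000, {"core", "api"}),
    Package("Best", 799.0, None, {"core", "api", "sso"}),
]


def cheapest_fit(outputs_needed: int, features_needed: set[str]) -> Package:
    """Return the lowest-priced package whose fences admit this usage."""
    for package in sorted(PACKAGES, key=lambda p: p.monthly_price):
        fits_volume = package.output_limit is None or outputs_needed <= package.output_limit
        if fits_volume and features_needed <= package.features:
            return package
    raise ValueError("no package fits this usage")


print(cheapest_fit(30, {"core"}).name)          # Free
print(cheapest_fit(500, {"core", "api"}).name)  # Better
print(cheapest_fit(50_000, {"core"}).name)      # Best
```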

Workflows: Applications for managing AI workflows are not all that different from those used to manage any data workflow. This is an application of mature technology to a new space. Pricing metrics will likely be the same as those for other data management workflows: process steps, the number of times the process is invoked, throughput, the number of applications connected, and in advanced applications network measures based on nodes and edges.
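A network-based pricing metric for a workflow could count the nodes (tools or process steps) and the edges (connections between them) in the workflow graph, plus the number of times the workflow is invoked. The sketch below assumes hypothetical per-node, per-edge, and per-invocation rates; the step names are illustrative only.

```python
def workflow_charge(
    edges: list[tuple[str, str]],
    invocations: int,
    rate_per_node: float,
    rate_per_edge: float,
    rate_per_invocation: float,
) -> float:
    """Monthly charge for a workflow described as a list of directed edges."""
    nodes = {step for edge in edges for step in edge}
    return (
        len(nodes) * rate_per_node
        + len(edges) * rate_per_edge
        + invocations * rate_per_invocation
    )


# Hypothetical AI data pipeline: 4 steps, 4 connections, run 1,000 times.
pipeline = [
    ("ingest", "clean"),
    ("clean", "train"),
    ("train", "evaluate"),
    ("clean", "evaluate"),
]
charge = workflow_charge(pipeline, invocations=1_000,
                         rate_per_node=10.0, rate_per_edge=5.0,
                         rate_per_invocation=0.01)
print(f"${charge:.2f}")  # 4*10 + 4*5 + 1000*0.01 = $70.00
```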

Models: This is where it starts to get interesting. All of the above, from infrastructure to data and tools to workflows, are meant to generate models. Many AI companies charge for the use of their model. This is what OpenAI does to price its GPT models.

In this case, OpenAI prices the input into the model (tokens) and then fences the models by speed and power. This is an example of usage-based pricing.
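Usage-based token pricing with tiered models can be sketched as below. The per-1,000-token rates and tier names are hypothetical placeholders, not OpenAI's price list, and the token estimate uses OpenAI's published rule of thumb of about four characters of English text per token; actual billing counts tokens with the model's own tokenizer.

```python
import math

# Hypothetical rates in dollars per 1,000 tokens. Faster or more capable
# models sit behind higher rates, which is how the tiers act as fences.
RATE_PER_1K_TOKENS = {"small_fast": 0.0005, "balanced": 0.002, "most_capable": 0.02}


def estimate_tokens(text: str) -> int:
    """Rough token count: about four characters of English per token."""
    return math.ceil(len(text) / 4)


def token_cost(tokens: int, tier: str) -> float:
    """Usage-based charge for a given number of tokens on a given tier."""
    return tokens / 1_000 * RATE_PER_1K_TOKENS[tier]


prompt = "Suggest three value metrics for an AI-powered invoice classifier."
print(estimate_tokens(prompt))
for tier in RATE_PER_1K_TOKENS:
    print(tier, f"${token_cost(1_500_000, tier):.2f}")
```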

Outputs: Models have outputs. OpenAI takes this approach to its image generation model Dall-E.

Here one pays more per image for higher image resolution.

For many applications, paying for the output of a model will make the most sense. One could pay per item classified, per pattern found, or per recommendation or prediction made.

Outcomes: The future of pricing is to price outcomes. There have been whole books written about this, see The Ends Game: How Smart Companies Stop Selling Products and Start Delivering Value by Marco Bertini and Oded Koenigsberg. In the long run, this is how the outputs of AI will be priced, and AI will help to address the current objections to outcome-based pricing:
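Pricing per output amounts to metering output events and multiplying by a price per output type. The sketch below assumes a hypothetical price list and a simple event log; a production meter would also need deduplication and billing periods.

```python
from collections import Counter

# Hypothetical prices in dollars per output delivered.
PRICE_PER_OUTPUT = {
    "classification": 0.001,
    "pattern": 0.005,
    "recommendation": 0.002,
    "prediction": 0.01,
}


def output_bill(events: list[str]) -> float:
    """Charge for a billing period, given a log of output events by type."""
    counts = Counter(events)
    return sum(PRICE_PER_OUTPUT[kind] * n for kind, n in counts.items())


usage_log = ["classification"] * 10_000 + ["prediction"] * 500
print(f"${output_bill(usage_log):.2f}")
```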

There are too many causes contributing to any significant outcome

Outcomes are too unpredictable to price

AIs can be trained to apply approaches like Judea Pearl’s causal reasoning to estimate the causal contribution of different factors (this is already routinely done in Health Economics and Outcomes Research). And prediction is one of the core use cases of modern AI; it can be used to predict the outcomes of any intervention or solution (in this way AIs will eventually replace value models and value-based pricing).
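Once a counterfactual baseline can be predicted and a causal share attributed, an outcome-based fee reduces to simple arithmetic. The sketch below assumes the baseline prediction and the attribution share come from upstream models (hypothetical here) and that vendor and customer have agreed on the vendor's share of the attributed gain.

```python
def outcome_fee(
    observed: float,
    predicted_baseline: float,
    attribution_share: float,
    vendor_share: float,
) -> float:
    """Vendor's fee as a share of the improvement attributed to its solution.

    observed: the outcome actually measured (e.g. revenue).
    predicted_baseline: predicted outcome without the solution.
    attribution_share: estimated causal contribution of the solution (0..1).
    vendor_share: agreed share of the attributed gain paid to the vendor (0..1).
    """
    uplift = max(observed - predicted_baseline, 0.0)  # no gain, no fee
    return uplift * attribution_share * vendor_share


# Hypothetical quarter: $1.2M observed vs. $1.0M predicted without the solution.
print(outcome_fee(1_200_000, 1_000_000, attribution_share=0.5, vendor_share=0.2))
```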

Research into How to Price AI

At Ibbaka, we plan to develop this framework for pricing AI in 3 ways:

Work with clients to apply it to their pricing challenges.

Ibbaka is focused on developing and implementing pricing models for B2B SaaS. As much of the innovation over the next few years will come from applications of artificial intelligence, we expect to do a lot of work in this area. Key SaaS verticals like Cybersecurity, Marketing Automation, and Financial Management are all actively incorporating AI into their solutions.

Research and compare AI pricing as it appears in the market.

For example, a great deal can be learned by comparing how Amazon Web Services, Google Vertex AI, MS Azure, or IBM Watson are priced. We will also be watching to see how Open.ai, Stability AI, and other innovators approach pricing.

Develop general value models for AI-based applications and services.

At this point in time, the best practice is to base pricing on the variables used in value models. By developing some general value models for AI we will be able to explore how value is being created in the market.

2. The Ethics of Pricing AI

AI will find many uses in B2B pricing over the next few years. AI systems will be used to build out value models, infer pricing models from these value models, set prices, configure complex solutions for value optimization (value optimization coming before price optimization), and so on. AI will also find applications in pricing research. Generative AIs like OpenAI’s GPT or Google’s Bard will be used to answer pricing research questions and will be leveraged to improve the design of pricing research.

Many of us have already taken the results of pricing research, whether this be market studies, financial and usage data, or conjoint analysis, and fed it into an LLM (Large Language Model) to get help in interpreting the data.

The next step is to augment and tune an LLM, perhaps an open-source one like LLaMA from Meta, with pricing data of various types to improve the quality of the conversation and maybe even the causal inference (for those wanting to go deep, this Microsoft Research paper is a fascinating read).

Of course, AI is nothing new to the pricing world. The heavy metal pricing optimization companies have been using machine learning for many years in their revenue and price optimization engines. One of the criticisms of these systems is a lack of transparency in their pricing recommendations. How far should sales, or a buyer, trust a pricing recommendation from an AI that is a black box and is difficult to understand or even to predict?

Pricing AI is not immune to the questions and doubts around ethics and ethical use that have troubled other uses of AI.

Earlier in May, we asked the following on LinkedIn:

“What are the most important ethical questions when it comes to applying AI to pricing?”

You can see the detailed comments here.

Transparency in Pricing and Pricing AIs

Back in March 2021, Ibbaka hosted a conversation between three pricing leaders on pricing transparency. Xiaohe Li, Stella Penso, and Kyle Westra went deep on what pricing transparency means. We summarized their conclusions in the below table.

What seems relevant to the ethics of pricing AI conversation are the two points on the pricing process.

“Pricing methods are understood.”

“Pricing algorithms are explained.”

There is a risk that as more and more B2B pricing is generated by AI, it will become less and less transparent. We will not know how the price was set and will not be able to explain the algorithms used to set the price.

Another way of saying transparency in the context of AI is explainable AI, or xAI. NIST (the US National Institute of Standards and Technology) has proposed four principles for xAI that will be relevant to the use of AI in pricing.

Explanation: A system delivers or contains accompanying evidence or reason(s) for outputs and/or processes.

Meaningful: A system provides explanations that are understandable to the intended consumer(s).

Explanation Accuracy: An explanation correctly reflects the reason for generating the output and/or accurately reflects the system’s process.

Knowledge Limits: A system only operates under the conditions for which it was designed and when it reaches sufficient confidence in its output.

All 4 of these principles can and should be applied to pricing AI.
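Two of these principles, Explanation and Knowledge Limits, translate directly into how a pricing recommender could behave. The sketch below is a hypothetical recommender, not a reference implementation of the NIST principles: it picks the candidate price with the highest expected revenue, attaches a plain-language reason, and declines to recommend when its (assumed) confidence score falls below a threshold.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PriceRecommendation:
    price: Optional[float]  # None when the system declines to recommend
    confidence: float
    explanation: str


def recommend_price(
    win_prob_by_price: dict[float, float],
    confidence: float,
    min_confidence: float = 0.8,
) -> PriceRecommendation:
    """Recommend the price with the highest expected revenue, with a reason.

    Knowledge limits: below min_confidence, abstain and defer to a human.
    """
    if confidence < min_confidence:
        return PriceRecommendation(
            None,
            confidence,
            f"Confidence {confidence:.2f} is below {min_confidence:.2f}; "
            "refer this deal to a human.",
        )
    expected = {price: price * p for price, p in win_prob_by_price.items()}
    best = max(expected, key=expected.get)
    return PriceRecommendation(
        best,
        confidence,
        f"${best:,.0f} maximizes expected revenue (${expected[best]:,.0f}) "
        f"at a predicted win probability of {win_prob_by_price[best]:.0%}.",
    )


# Hypothetical predicted win probabilities for three candidate prices.
quotes = {10_000.0: 0.60, 12_000.0: 0.55, 15_000.0: 0.35}
print(recommend_price(quotes, confidence=0.9).explanation)
print(recommend_price(quotes, confidence=0.5).explanation)
```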

Another perspective on the ethics of pricing AI comes from the (frightening) world of autonomous weapons. Here the constructs of human-in-the-loop, human-on-the-loop, and human-out-of-the-loop are used to define levels of human control and involvement.

human-in-the-loop: a human must instigate the action of the weapon (in other words not fully autonomous)

human-on-the-loop: a human may abort an action

human-out-of-the-loop: no human action is involved

These approaches are relevant to pricing. Most current systems are either human-in-the-loop or human-on-the-loop, which gives the human doing the pricing a level of control, and with control accountability. This is not always the case though. Automated trading systems, for example, are human-out-of-the-loop in many cases, and move too fast for human intervention. As we move to more and more M2M (Machine to Machine) business models we are going to see more use of pricing AIs with humans out of the loop. This should give us pause.
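The three levels of control can be made concrete as a gate between a pricing AI's proposal and the price that takes effect. This is a simplified sketch with a hypothetical reviewer; in a real on-the-loop system the human would have only a limited window to abort, which is not modeled here.

```python
from enum import Enum
from typing import Callable, Optional


class Oversight(Enum):
    IN_THE_LOOP = "in"       # a human must approve before a price takes effect
    ON_THE_LOOP = "on"       # the price takes effect unless a human aborts it
    OUT_OF_THE_LOOP = "out"  # no human action is involved


def apply_price(
    proposed: float,
    mode: Oversight,
    human_approves: Callable[[float], bool],
    human_aborts: Callable[[float], bool],
) -> Optional[float]:
    """Return the price that takes effect, or None if it is blocked."""
    if mode is Oversight.IN_THE_LOOP:
        return proposed if human_approves(proposed) else None
    if mode is Oversight.ON_THE_LOOP:
        return None if human_aborts(proposed) else proposed
    return proposed  # out of the loop: the machine's price stands


# A hypothetical reviewer who approves prices under $1,000
# and aborts anything over $1,500.
def reviewer_approves(price: float) -> bool:
    return price < 1_000


def reviewer_aborts(price: float) -> bool:
    return price > 1_500


for mode in Oversight:
    print(mode.name, apply_price(1_200.0, mode, reviewer_approves, reviewer_aborts))
```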

Pricing leaders need to be leading the discussion of pricing transparency and control. If we fail to do this we are inviting accusations that we are poor stewards of the pricing discipline and that we have lost control over and understanding of the fundamentals of our discipline.

Pricing Fairness and Pricing AI’s

At the end of the day, the goal of pricing transparency and AI is pricing fairness. The reason we are concerned with bias is that it puts fairness in question. AIs developed using machine learning are notorious for being biased because of the data sets used to train them or the way the data was tagged (in supervised learning). But what do we mean by ‘fairness’ in the context of pricing?

Back in 2018, Ibbaka proposed three principles of pricing fairness that we use in working with our clients.

Is it clear how we set prices? Can we explain this to ourselves? Can we explain this to our customers? Is our website clear on this? Can sales communicate it?

Is our pricing consistent? Do we treat similar customers in the same way? Is our discounting policy clear and consistent?

Are we creating differentiated value? Do we use this to set prices? Do we adjust prices based on the value we are creating? Are we sharing that value with our customers?

It seems to me that these can be connected to fairness in pricing AI. The first principle speaks to the need for transparency. Without transparency, we will struggle to have ethical pricing AIs.

Principle two needs to be strengthened, for as stated it is not strong enough to deal with the bias risk in AI.
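One way to strengthen the consistency principle for pricing AIs is to test it: within a segment of similar customers, compare average discounts across another attribute (region, channel, or a sensitive attribute) and flag large gaps for review. The sketch assumes hypothetical deal records and an arbitrary five-point threshold; a gap is a prompt for investigation, not proof of bias.

```python
from collections import defaultdict
from statistics import mean


def discount_gaps(deals: list[dict], segment_key: str, group_key: str) -> dict[str, float]:
    """Per segment, the spread between the highest and lowest average discount across groups."""
    by_segment: dict[str, dict[str, list[float]]] = defaultdict(lambda: defaultdict(list))
    for deal in deals:
        by_segment[deal[segment_key]][deal[group_key]].append(deal["discount"])
    gaps = {}
    for segment, groups in by_segment.items():
        averages = [mean(discounts) for discounts in groups.values()]
        gaps[segment] = max(averages) - min(averages)
    return gaps


# Hypothetical deals; similar customers share a segment.
deals = [
    {"segment": "mid_market", "region": "A", "discount": 0.10},
    {"segment": "mid_market", "region": "A", "discount": 0.12},
    {"segment": "mid_market", "region": "B", "discount": 0.20},
    {"segment": "mid_market", "region": "B", "discount": 0.22},
    {"segment": "enterprise", "region": "A", "discount": 0.15},
    {"segment": "enterprise", "region": "B", "discount": 0.16},
]
gaps = discount_gaps(deals, "segment", "region")
flagged = [segment for segment, gap in gaps.items() if gap > 0.05]
print(flagged)  # ['mid_market']
```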

The third principle, concerning the differentiated value and how value is shared, still seems sound. In fact, we should be able to develop pricing AIs that help us get better at understanding value and then working through the full cycle of creating, communicating, delivering, documenting, and then capturing part of that value back in price.

3. Pricing AI Content Generation

There has been a lot of investment into content generation AI, and in the summer of 2022, there was an explosion in applications and interest. With interest came use. With use came a rapid improvement in quality. Now people have started to ask ‘How can these things make money?’

Some people have been surprised that deep learning AIs are so good at content generation, but this is part of their underlying logic. An example of this is GANs or Generative Adversarial Networks. A GAN is two AIs that compete with each other, one to generate content and one to tell ‘real’ content from ‘fake’ content.

The Generator generates content; its job is to fool a second AI, the Discriminator, whose job is to tell real images from images created by the Generator. In a GAN, the Generator and Discriminator compete with each other and both get better.

So one could have as a ‘real image’ something like ‘paintings of fruit by Cézanne’ and challenge the Generator to create images that would fool the Discriminator. If you haven’t yet played with Dall-E, give it a try (the name is supposed to make you think of the artist Salvador Dali and the robot WALL-E from the Pixar movie).

The images on top are the originals; those on the bottom were generated by AI.

AI content generation already exists for:

Text - everything from poetry and novels to opinion pieces and technical reports

Translations - between languages and the creation of new languages

Images - what we will focus on here

Music - of many styles and emotional tones

Images for Music - static and dynamic

Music for Images

Music for Video

3D forms (using 3D printers for output)

This list will expand to anything where one can apply a GAN, and commercial applications of GANs are growing rapidly. They range from healthcare (X-ray analysis) to finance (fraud detection), to design (assumption testing). This is a rapidly developing field that is rewiring how we design software and conduct business.

Other companies in AI image generation include:

Growth of AI-generated content

Given this enormous growth, it is worth asking how value is being created, who it is being created for, and how that value could be monetized. Operating at this scale and delivering a great UX at scale costs money, a lot of money. According to Crunchbase, $1 billion has been invested in OpenAI, with the lead investors being Microsoft, Khosla Ventures, and the Reid Hoffman Foundation. These investors will want a return at some point.

How OpenAI is pricing Dall-E

OpenAI and Dall-E do have a pricing model. They are monetizing both the API and image generation by users.

The standard pricing models for AI services come in 6 forms.

Resources - the cost of resources or infrastructure used to provide the service (deep learning AIs are computationally expensive and run best on hardware and software architected for their specific performance requirements; this is one reason for the sharp increase in demand for Nvidia and other graphics chip makers)

Inputs - the data used for training, the number of training runs (providing data for deep learning is an emerging business in its own right); the amount of data that will be consumed by the AI once it is in production

Complexity of the model - number of layers, degree of back propagation, advanced models like Generative Adversarial Networks and so on

Outputs - number of models, number of applications of model (classifications, predictions, recommendations)

Workflows - number of workflows using the model, complexity of the workflows

Performance - accuracy of classifications, predictions, recommendations (i.e. do people act on the recommendations)

(Further reading: How would you price generic AI services.)

Dall-E is priced using an output pricing metric. One can embed Dall-E in an application and then one pays per image generated, with the price per image tied to image quality. Given the rate at which people publish images on these platforms, that could generate significant revenue. See below for thoughts on who could get value from embedding Dall-E and how.

The underlying language models used in Dall-E and other OpenAI solutions are also available; in this case the pricing metric is tokens. A token is a chunk of text (in English, roughly four characters or about three-quarters of a word), and the price depends on the number of tokens processed. This is an input metric.

Recently, OpenAI has started to gate this service so that one gets 50 free image generations in the first month and then 15 per month thereafter. If you want to generate more than this per month you will need to start paying. When this blog article was written (Nov. 5, 2022), the price was about US$0.13 per image. At 2 million images per day, if fully captured, that would be $260,000 per day or just under $95 million per year.
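The free-tier rule and the revenue estimate in the paragraph above can be checked with a few lines of arithmetic. The sketch uses the article's figures as of November 2022 (about $0.13 per image, 50 free generations in the first month and 15 per month after that, 2 million images per day) and, like the article, assumes every image is paid for.

```python
PRICE_PER_IMAGE = 0.13  # approximate price cited in the article (Nov. 2022)


def free_allowance(month_index: int) -> int:
    """50 free generations in the first month (index 0), then 15 per month."""
    return 50 if month_index == 0 else 15


def monthly_bill(generations: int, month_index: int) -> float:
    """What a user pays in a month after the free allowance is used up."""
    billable = max(generations - free_allowance(month_index), 0)
    return billable * PRICE_PER_IMAGE


print(f"${monthly_bill(115, month_index=1):.2f}")  # 100 billable images: $13.00

# Upper bound on revenue if every one of 2 million daily images were paid for.
daily_revenue = 2_000_000 * PRICE_PER_IMAGE
annual_revenue = daily_revenue * 365
print(f"${daily_revenue:,.0f} per day, ${annual_revenue:,.0f} per year")
```

The annual figure comes out at about $94.9 million, which matches the "just under $95 million" in the text.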

A marketplace for Image Generation AI prompts

Before exploring the value of AI content generation apps, it's worth noting another intriguing secondary trend: PromptBase, a marketplace for buying and selling prompts for image generation. It is not a lucrative business today, but it has the potential for significant growth, and if such marketplaces become substantial revenue sources, the platforms may want to control them.

What is the Value of AI image generation?

Ibbaka is deeply rooted in value-based pricing. So before we ask how to price or how much to charge, we ask about value. Let’s think about the following:

Who is value created for?

How is the value created?

How much value is created?

How else could the value be created?

How much does it cost to create and deliver the value?

How much does it cost the customer to get the value?

We are in the early days of category creation here. In category creation, the critical first step is to get people to a shared belief about how value is being created.

Given the early stage we are at with this category, OpenAI’s approach of giving people an API to embed in their own application and then to charge for outputs and resolution of the output makes a lot of sense. But this cannot be the endpoint. Eventually OpenAI, Stability.ai and their competitors will need to lead the conversation on value.

What are the Economic Value Drivers?

Conventionally, economic value drivers are organized into 6 categories:

Revenue

Cost

Operating capital

Capital investment

Risk

Optionality

(There are other ways to organize value drivers, see How to organize an economic value model for use in pricing design.)

Here are some early ideas on how the economic value drivers could play out. The relevant value driver categories are Revenue, Cost and Optionality.

Revenue

There are likely some direct ways to impact revenue. We know that images (and video) have an impact on both SEO and engagement. Dall-E could be set up to generate images to optimize SEO for specific search terms. It is fairly easy to get from this to a revenue value driver. One could go farther and start optimizing the images that drive conversion across the pipeline or that accelerate pipeline velocity. There are well developed value drivers for this as well.

There is also the opportunity to sell the images created and OpenAI could participate in that revenue.
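A revenue value driver for SEO-optimized images could be written as a simple funnel equation. Every input here is hypothetical, and the sketch assumes the extra visits are caused by the images, which is exactly the attribution question a real value model would need evidence for.

```python
def seo_image_revenue_driver(
    extra_visits_per_month: float,
    visit_to_lead_rate: float,
    lead_to_customer_rate: float,
    first_year_customer_value: float,
) -> float:
    """Annual revenue attributed to additional search traffic from generated images."""
    new_customers = (
        extra_visits_per_month * 12 * visit_to_lead_rate * lead_to_customer_rate
    )
    return new_customers * first_year_customer_value


# Hypothetical: 2,000 extra visits a month, 2% become leads,
# 10% of leads convert, each new customer is worth $5,000 in year one.
print(seo_image_revenue_driver(2_000, 0.02, 0.10, 5_000))
```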

Cost

Image generation is expensive. AI content generation could reduce costs by making artists and graphic designers more productive or, in some cases, by replacing them altogether. No human artist would want to generate images for $0.13 per image.

The AI could be introduced in many parts of the workflow to make image generation easier, faster and less expensive. Adobe Creative Cloud and presentation applications like Google Slides or Microsoft PowerPoint will all include this sort of functionality, I would guess by early next year. And cost value drivers are likely to be important if there is an economic slowdown.
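The cost value driver compares the fully loaded cost of producing an image with and without AI. The sketch below is illustrative: the designer rate, hours per image, generations per usable image, and review time are all hypothetical; only the $0.13 per-image price comes from the article.

```python
def image_cost_savings(
    images_needed: int,
    designer_hourly_rate: float,
    designer_hours_per_image: float,
    generations_per_usable_image: int,
    price_per_generation: float,
    review_hours_per_image: float,
) -> float:
    """Cost value driver: savings from AI-assisted versus fully manual image creation."""
    manual_cost = images_needed * designer_hourly_rate * designer_hours_per_image
    ai_cost_per_image = (
        generations_per_usable_image * price_per_generation
        + designer_hourly_rate * review_hours_per_image  # a human still selects and edits
    )
    return manual_cost - images_needed * ai_cost_per_image


# Hypothetical: 100 images, $60/hour designer, 2 hours each by hand;
# with AI, 20 generations at $0.13 plus 15 minutes of review per usable image.
print(f"${image_cost_savings(100, 60.0, 2.0, 20, 0.13, 0.25):,.2f}")
```

Most of the remaining AI-side cost in this example is human review time, not generation fees, which is why the driver is framed as productivity as much as replacement.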

Optionality

One of the most fascinating aspects of AI content generation is that it allows one to rapidly generate, explore and evolve options. The design space and our ability to explore it is massively expanded. Think about the workflow:

Create a prompt

See the images

Tweak the prompt (multiple times)

Choose an image

Generate variations of the image

Edit the image

Download the image

Use the image

One could move back and forth between steps 1, 2 and 3 many times, possibly generating 100s of images, at $0.13 each, to relatively quickly generate the image you need.
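The cost of exploring options this way can be estimated with a simple probability model. Assume, hypothetically, that each prompt returns a batch of images and that each image independently has the same small chance of being the one you need; the number of rounds until success is then geometric. The batch size and hit rate are illustrative; the price is the article's $0.13 per image.

```python
PRICE_PER_IMAGE = 0.13  # price cited in the article (Nov. 2022)


def expected_exploration_cost(hit_rate: float, images_per_round: int) -> float:
    """Expected spend until at least one acceptable image appears.

    Each round succeeds with probability 1 - (1 - hit_rate) ** images_per_round,
    so the expected number of rounds is the reciprocal of that probability.
    """
    round_success = 1 - (1 - hit_rate) ** images_per_round
    expected_rounds = 1 / round_success
    return expected_rounds * images_per_round * PRICE_PER_IMAGE


# Hypothetical: 4 images per prompt, 2% of images are good enough.
print(f"${expected_exploration_cost(0.02, 4):.2f}")
```

Even a hit rate ten times lower keeps the expected spend in the tens of dollars under these assumptions, which is why cheap iteration changes the economics of exploring options.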

In other words, for this use case, AI content generation is a disruptive innovation against photo libraries.

Optionality value drivers require more experience and data to quantify, but they connect to resilience and adaptability and are slowly gaining recognition as an important part of some value models.

How would you price Dall-E and other AI content generation applications?

Open.ai’s current approach to pricing makes sense early in category creation. To review, they have two pricing models that I am aware of:

People generating images at $0.13 per image

Embedding the API in other applications at $0.16 - 0.20 per image depending on resolution

Note that the API cost is higher per image generated. This helps Open.ai keep its options open and prevent cannibalization while it works out its business model.

Over time, I expect more business focussed solutions to be built from content generation AIs. As this happens, pricing models are likely to change. There are four possibilities depending on the business value being created and how easy it is to quantify that value.

Input based pricing models, where the volume and complexity of input determines the price (Open.ai uses this for its model business today).

Output based pricing models where the price is based on an estimate of the value (this is kind of what Open.ai is doing today for Dall-E).

Outcome based pricing models, these will be used in areas like SEO and pipeline optimization. Outcome based pricing models will be used anywhere there is enough data to build causal models and quantify outcomes in a way all parties can agree on.

Revenue sharing pricing models, these are a type of outcome based model, but in this case the parties share risk and revenue over time.

These four types of pricing models are likely to become more common in AI generally, and to replace the current approaches taken by Amazon, Microsoft and Google which tend to be resource based (a fancy way of saying cost plus).

Over time, I expect some form of outcome-based pricing to win in most categories. The promise of AI is better predictions. The barrier to outcome-based pricing has been the difficulty of prediction and the challenge of untangling causal relations. AI should help to address both these objections.

Dall-E, Ownership and Bias

Ownership of images generated by an AI

Some of the revenue models are going to turn on ownership of the image generated.

On November 5, 2022 this was Open.ai’s policy.

You own the generations you create with DALL·E.

We’ve simplified our Terms of Use and you now have full ownership rights to the images you create with DALL·E — in addition to the usage rights you’ve already had to use and monetize your creations however you’d like. This update is possible due to improvements to our safety systems which minimize the ability to generate content that violates our content policy.

So you can download what ‘you’ ‘create’ and claim ownership. You could even try to sell the images, maybe as an NFT (Non Fungible Token) and become part of that bubble.

For more on the ownership of AI generated images see Who owns DALL-E images? Legal AI experts weigh in.

So far so good, but are these systems violating other forms of ownership? One of the most powerful, and engaging, ways of using Dall-E is to create a prompt ‘XYZ in the style of ABC’ (see the examples below, ‘a quiet lake in the style of Miro, Dali, Lee Ufan, Emily Carr’). One of these people, Lee Ufan, is a living artist.

What if I tried a prompt ‘a fat human like pig in the style of Miyazaki, Pixar, Disney’? Miyazaki (Studio Ghibli), Disney and Pixar are all aggressive about protecting their intellectual property and style. They are not likely to accept this use.

Bias in AI generated images

And then there is the question of bias. Dall-E seems to be much better at generating images influenced by European and American art, animation and illustration. It does less well with Indigenous, Asian or Latin American themes. Perhaps this will self-correct with time. But there are a lot of feedback loops built into these systems. A GAN basically structures feedback between two competing AIs. These systems tend to be what is called Barnesian performative; in other words, the more they are used, the more they come to define the norm, and the range of what is considered, and what is thought possible, narrows rather than expands.

See: AI art looks way too European and the example below of the Japanese suiboku painter Sesshu.

AI content generation is a ‘brave new world’ in both Shakespeare’s sense (The Tempest) and Huxley’s (Brave New World).
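The narrowing effect of a performative feedback loop can be illustrated with a toy simulation. This is not a model of how Dall-E is trained; it only assumes, hypothetically, that a generator slightly over-represents styles that are already common and that its outputs flow back into the pool it learns from. Diversity is measured with Shannon entropy, and the style shares are made up.

```python
from math import log


def entropy(shares: list[float]) -> float:
    """Shannon entropy (in nats) of a distribution over styles."""
    return -sum(p * log(p) for p in shares if p > 0)


def feedback_round(shares: list[float], sharpening: float = 1.5,
                   feedback_weight: float = 0.3) -> list[float]:
    # Hypothetical generator bias: common styles are over-represented
    # (shares raised to a power > 1, then renormalized).
    boosted = [p ** sharpening for p in shares]
    total = sum(boosted)
    generated = [b / total for b in boosted]
    # Generated images flow back into the pool the system learns from.
    return [(1 - feedback_weight) * p + feedback_weight * g
            for p, g in zip(shares, generated)]


shares = [0.5, 0.2, 0.15, 0.1, 0.05]  # hypothetical shares of five styles
initial = entropy(shares)
for _ in range(20):
    shares = feedback_round(shares)
print(f"entropy {initial:.3f} -> {entropy(shares):.3f}; top style share {shares[0]:.2f}")
```

Under these assumptions, entropy falls every round and the most common style's share grows: the more the system is used, the narrower the range of what it produces.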

4. Pricing and Generative AI

Copywriting and text generation is one of the early use cases for generative AI. There have been a number of product announcements in this area in the late spring of 2023. A lot of money is being invested.

So, how will the value being created get captured so that these innovations are sustainable?

8 Ways to Capture the Value of Generative AI

The 8 ways to capture the value of a B2B SaaS innovation are by…

Growing the market by bringing in users who would not have used the application without the new functionality

Capturing market share by winning over customers using competing solutions

Keeping customers who might have otherwise left

Increasing use, which, if you have usage-based pricing, will increase revenue

Increasing the price of the existing service, in other words, as the justification for a price increase

Offering the functionality as an optional extension that users must pay for

Using the functionality as a fence that pushes people to buy a more expensive package in a Good-Better-Best style package design

Packaging the functionality as a new offer and taking a new product to market

A Simple Framework for Generative AI Pricing Strategy

Choice of pricing metric for generative AI will depend on:

Overall Strategy

General Product Strategy

Product Strategy for the Specific Product

By working through this cascade, you can start to think through how you will approach pricing AI. There are predictable patterns here. Most companies will be conservative.

Establish a defensive positioning to buy time (I think this is what most of the large companies are actually doing)

Enhance existing value drivers for existing customers

Bundle AI with other functionality

That is what a lot of the announcements we are seeing amount to.

Some companies will go further; Microsoft, with its full-throated adoption of OpenAI, is an example.

Enhance current positioning

Create new value drivers for existing customers

Offer AI as a priced product enhancement

A few companies will be focused on category creation. Many of these will be startups, but a few large companies will also play.

Create a new positioning

Create new value drivers for new customers

Offer AI as a separate product

Of course there are other ways to align general strategy with general product strategy and specific products, but I suspect these will be the three most common patterns.

One can then map the 8 Value Capture Tactics to the three strategies.

Two Generative AI Startups and their Pricing

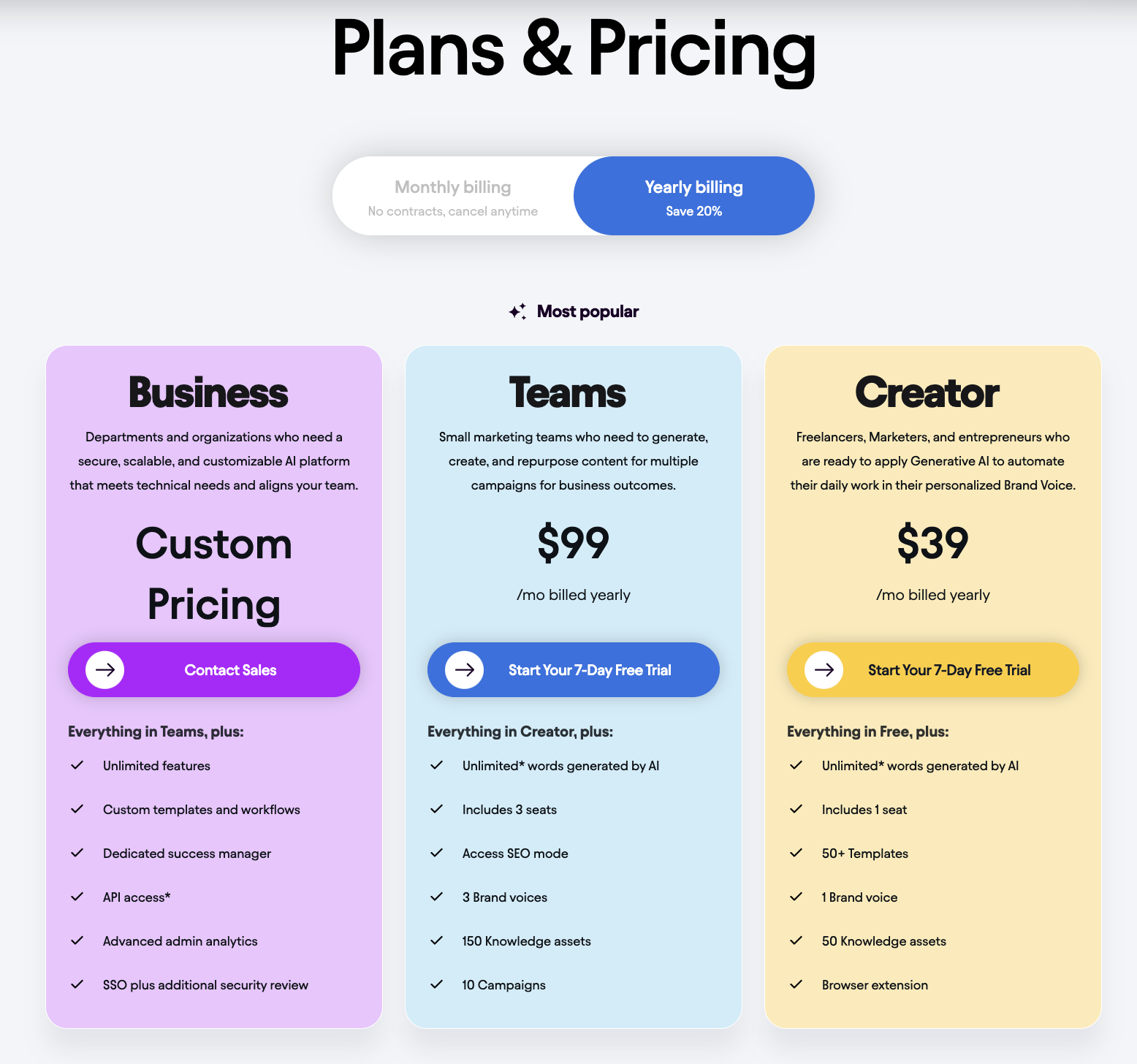

Jasper and Copy.ai are early entrants into the rapidly emerging Brand and Copywriting category. Let’s take a look at their value propositions and pricing.

Jasper helps you create on-brand AI content and is not limited to text generation. Images are also included. The key differentiator here is that Jasper will speak in your brand’s voice (and implicitly, if you don’t have a brand voice, then the AI will develop one). You can set the tone: Cheeky, Formal, Bold, Pirate. More importantly, you can train the AI on your own content so that it will learn how to write about your brand.

Jasper pricing has two priced tiers and a business tier. It combines product-led growth (for Teams and Creator) and sales-led growth (for Business). Packages are fenced by the number of users, number of brands, knowledge assets, campaigns and integrations. Seems a bit complex to me.

Interestingly, there is no limit on the number of words generated by the AI. Contrast this with LLM vendors like Open.ai and Cohere, which charge for the number of tokens input and output (we are working on a post comparing Open.ai and Cohere’s pricing).

Jasper also makes value claims on its website.

It is hard to evaluate these claims without more data. The 3.5X return on investment is interesting (though low for a new innovation where 10X is more common) but one wants to know what factors are driving this ROI. A value management system like Ibbaka Valio can do this.

If one can trace the connection from content download to actions that generate revenue you could build a value driver from content downloads. It is this sort of value driver that is likely to drive Jasper’s adoption. Revenue value drivers are more powerful than cost value drivers in most use cases.
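To make the idea concrete, here is a minimal Python sketch of a revenue value driver built from content downloads, next to a simpler cost value driver (writer time saved). Every rate and dollar figure is a hypothetical assumption, and the class and function names are illustrative only; they are not part of Jasper or Ibbaka Valio.

```python
from dataclasses import dataclass


@dataclass
class ContentRevenueDriver:
    """Hypothetical revenue value driver: downloads -> leads -> customers -> revenue."""
    downloads_per_month: float
    lead_rate: float             # assumed share of downloads that become qualified leads
    close_rate: float            # assumed share of leads that become customers
    revenue_per_customer: float  # assumed revenue from each new customer (US$)

    def monthly_value(self) -> float:
        return (self.downloads_per_month * self.lead_rate
                * self.close_rate * self.revenue_per_customer)


def time_saved_value(hours_saved: float, hourly_cost: float) -> float:
    """Hypothetical cost value driver: writer hours saved times loaded hourly cost."""
    return hours_saved * hourly_cost


def roi_multiple(total_value: float, total_cost: float) -> float:
    """Value created per dollar spent; 3.5 reads as a '3.5X' return."""
    return total_value / total_cost


revenue = ContentRevenueDriver(2_000, 0.05, 0.10, 5_000).monthly_value()  # about 50,000
savings = time_saved_value(hours_saved=40, hourly_cost=50)                # 2,000
print(round(revenue), savings, round(roi_multiple(revenue + savings, 13_000), 2))
```

Laid out this way, the revenue driver dwarfs the cost driver in this example, and the resulting ROI depends heavily on the assumed lead and close rates, which is exactly what one would want to see before trusting a published ROI figure.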

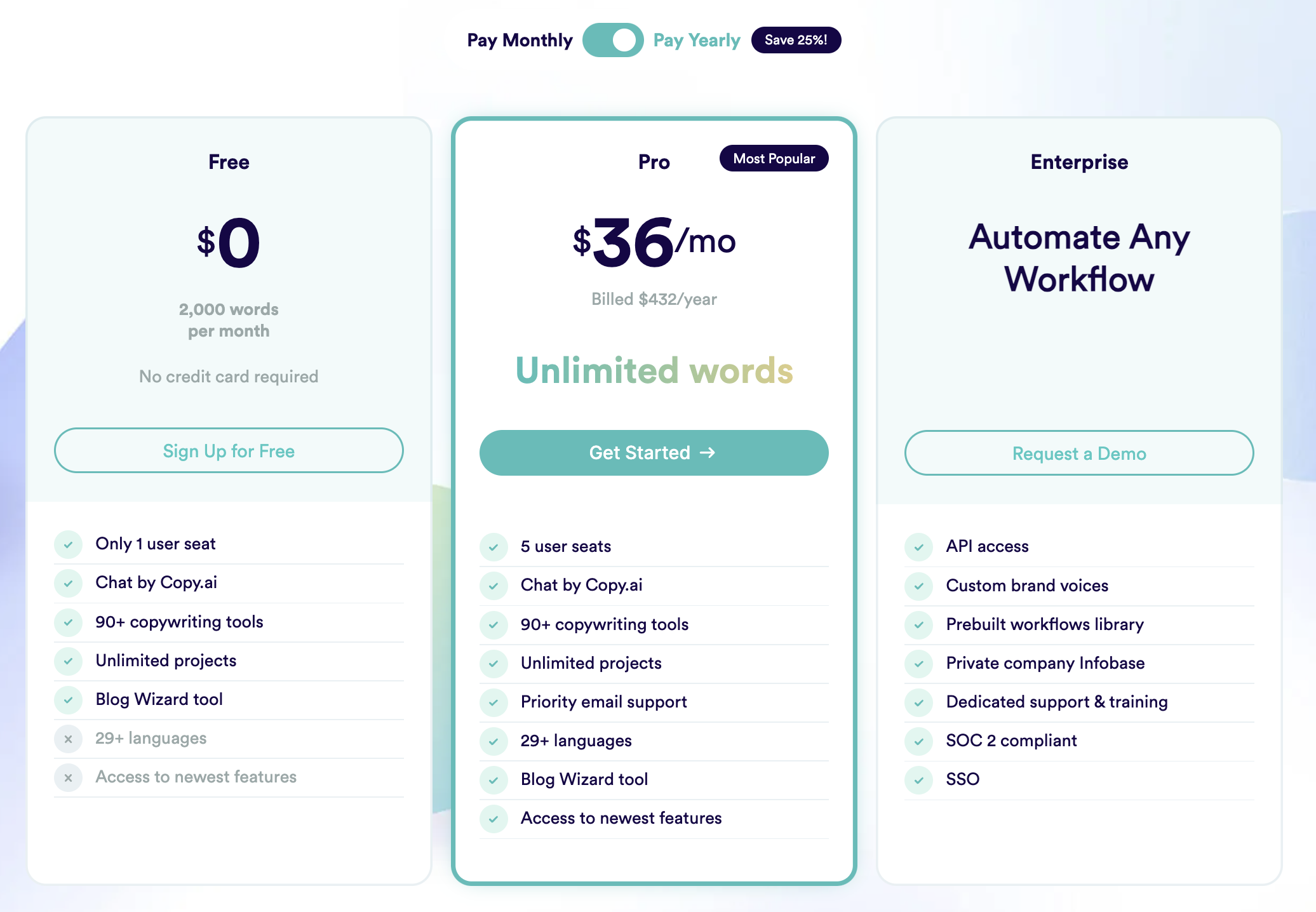

Copy.ai is also part of the emerging category of AI copywriting. Its key value promises are:

These are very similar to those for Jasper. One of the signs that a new category is cohering is that different vendors have similar value propositions. The difference here is that Copy.ai has mapped value propositions to personas: ‘Write blogs 10X faster’ for Blog Writers (I am skeptical of this claim) and ‘Write higher converting posts’ for Social Media Managers. If one can quantify the value of conversions this can be a compelling value driver.

Let’s look at the Copy.ai pricing page. The pricing metric is opaque and has to be constructed from the different fences. The fences are ‘words per month,’ ‘user seats,’ and various functionality points.

The Enterprise package seems almost like a different application with its focus on workflows, SOC-2 compliance (a lot of companies are concerned about the security implications of AI adoption). Note that ‘Custom brand voices’ comes in as a special feature of the enterprise package; for Jasper this was a core value proposition across all packages.

Should copywriters be worried?

One of the current concerns about Generative AI is that it will take away jobs. Visual Capitalist has a good infographic on this, based on Open.ai research, GPTs are GPTs: The Labor Market Impact Potential of Large Language Models. Writers and authors are one of the groups with high exposure.

In their current state, Jasper and Copy.ai are meant to complement skilled humans and not to replace them. One would not want to rely on these systems to write finished copy in the brand’s voice. But they are likely to get there. And this will reduce the number of jobs for writers as writers. They may have new jobs as prompt engineers, but that is a different skill set with different emotional qualities.

Who is going to read all the content that these systems are generating? If a writer becomes 10X more efficient, are they going to write 10X more content? I hope not. Who would read all that content? Other AIs, I suppose.

Writing is one of those skilled trades where the best writers are orders of magnitude more impactful than the average writer. Perhaps AI will raise the quality of writing rather than lead to the generation of more writing. It would be nice to read more well written articles and I would like to write better myself.

From a business point of view, this sounds like a Red Queen game. A Red Queen game is one in which everyone in an industry improves, so the improvement becomes table stakes. Not much changes when it comes to differentiation or positioning. The idea comes from Lewis Carroll’s Through the Looking-Glass: “Now, here, you see, it takes all the running you can do, to keep in the same place. If you want to get somewhere else, you must run at least twice as fast as that!”

Red Queen games are good for providers. We will all have to adopt these tools. But for the rest of us, it is going to be a lot of work to keep up!

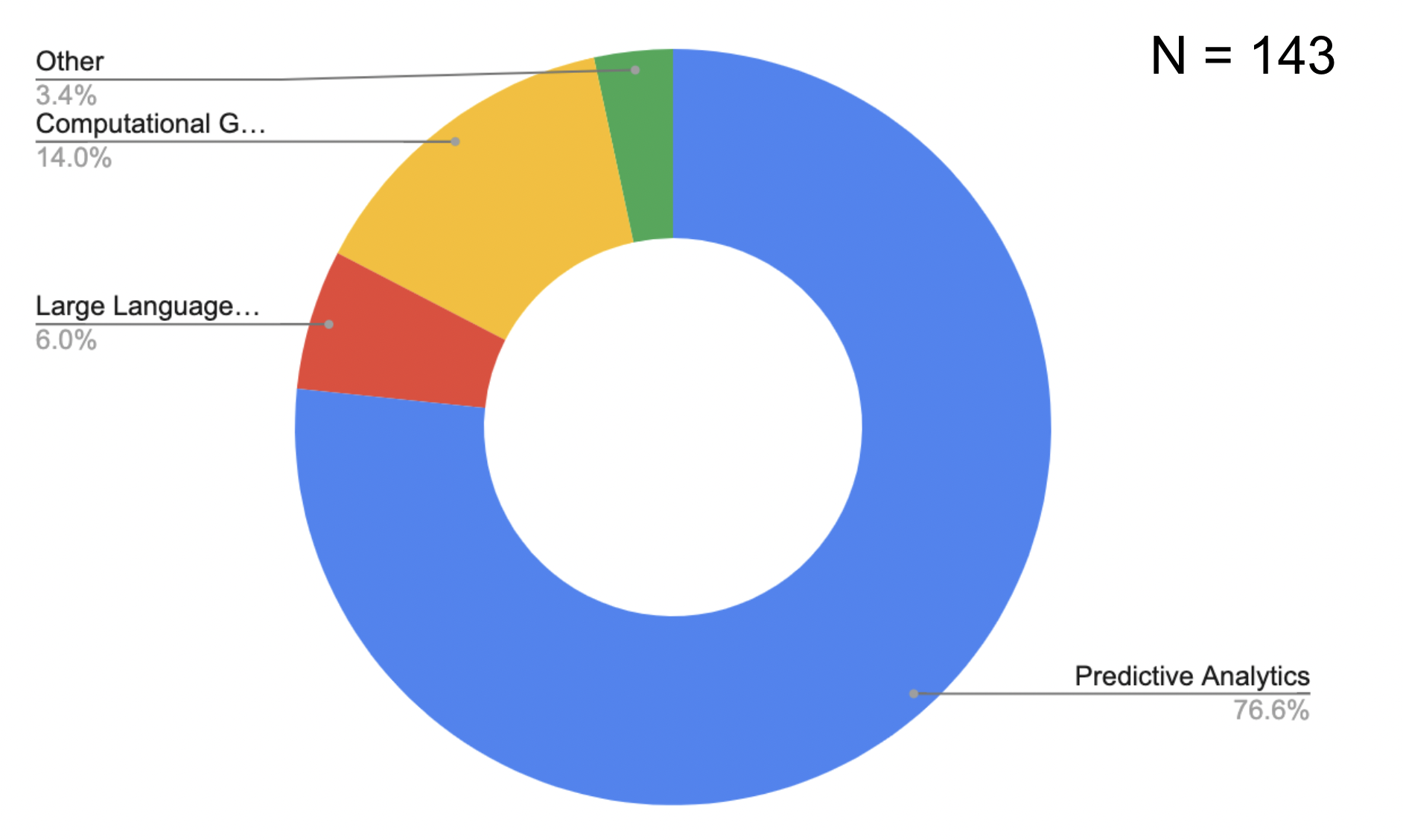

5. What flavor of AI will be used in pricing?

We are in the middle of an AI renaissance, sparked by the enormous popularity of ChatGPT and its many applications. AI is not new to the pricing space. The classic ‘heavy metal’ pricing applications, PROS, Vendavo and Zilliant, have used advanced predictive analytics since their first solutions, which in the case of PROS go all the way back to the mid-80s. Their cloud competitor, Pricefx, also has some powerful approaches to optimizing prices based on a mashup of internal and external data.

But there has been a sea change over the past year, driven by the scale and power of new approaches.

We reached out to the pricing community recently to ask…

Which approach to AI will be most relevant to pricing over the next three years?

Predictive Analytics - 76.3%

Large Language Models like GPT - 6.0%

Computational Game Theory - 14.0%

Other - 3.4%

Predictive Analytics and Pricing

This is the established approach to using AI in pricing, and as the above poll suggests, most pricing people are comfortable with this. All of the major pricing software packages make use of this form of AI and are migrating their platforms to build their predictive models using machine learning generally and deep learning specifically.

Deep learning is the approach to AI pioneered by, among others, Geoffrey Hinton at the University of Toronto and Yoshua Bengio at the Université de Montréal.

Using predictive analytics for load balancing and price optimization is well established. The question to ask is how we can extend this approach. Some of the ideas being discussed put pricing in a wider context.

Predictive Engagement - can we predict the future engagement of users on software platforms? If we can, then we can use this to make usage based pricing more predictable, which would remove one of the common objections to this approach to pricing.

Renewal Probability and Price Optimization mashups - SaaS businesses are obsessed with renewals as they underpin the business model and customer lifetime value. Customer satisfaction platforms look at usage, CSAT (Customer Satisfaction) and other data to predict the probability of renewal. Could pricing analytics be combined with user analytics to improve predictions of renewals?

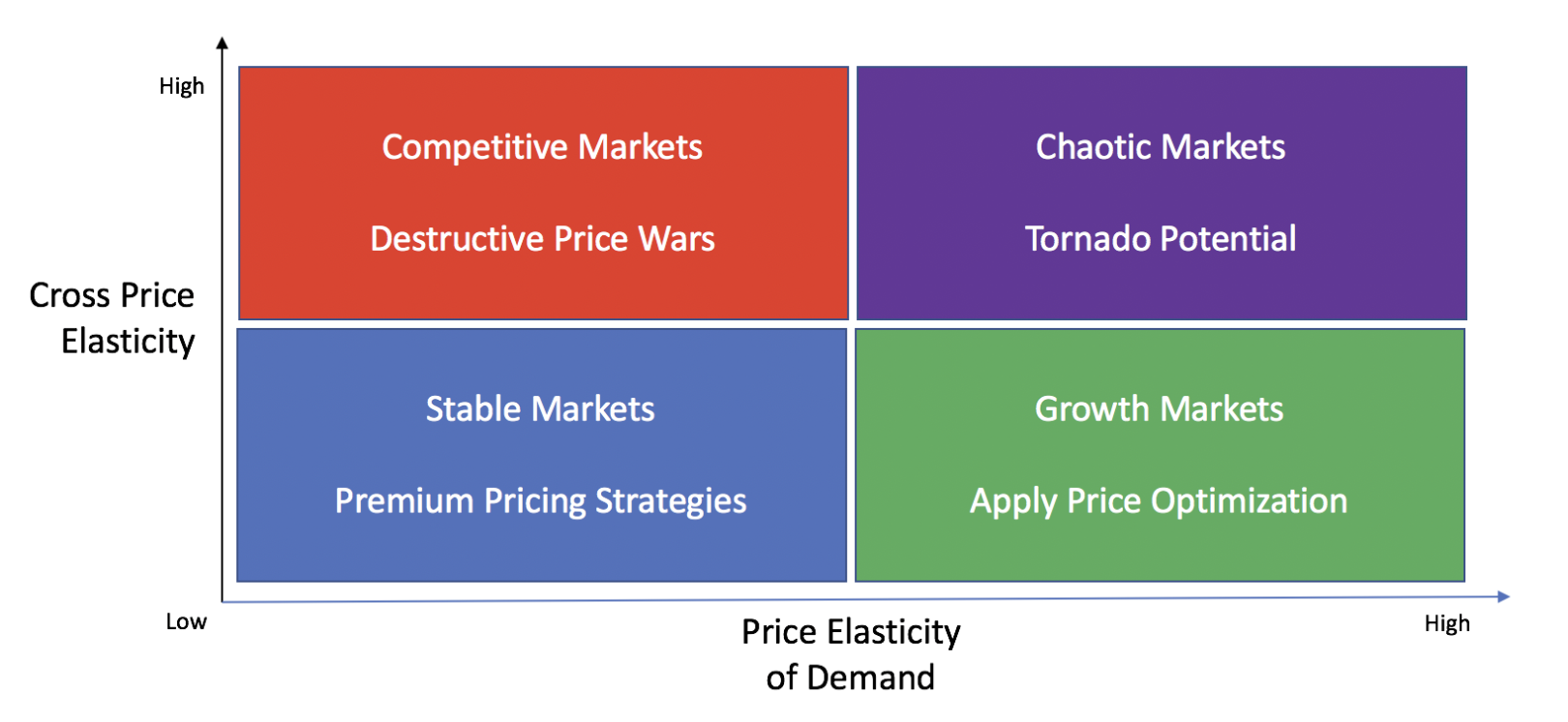

Market Dynamics - there are complex interactions between price elasticity of demand and cross price elasticity (how demand for one offer responds to a change in the price of another, for example how many customers defect to a competitor when you raise your price). Conventional pricing software has not been very good at modeling and predicting these interactions, which can behave as chaotic systems. It is possible that Deep Learning will do better, especially when used in Generative Adversarial Networks or GANs (see below).
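For reference, the two quantities have standard definitions, written below with Q for quantity demanded and P for price, for your offer A and a competitor’s offer B. A positive cross price elasticity means the two offers are substitutes: when A raises its price, demand shifts toward B.

```latex
\varepsilon_{AA} = \frac{\partial Q_A}{\partial P_A}\,\frac{P_A}{Q_A}
\qquad\qquad
\varepsilon_{BA} = \frac{\partial Q_B}{\partial P_A}\,\frac{P_A}{Q_B}
```

Modeling market dynamics means estimating both at once, and both can move as competitors react to one another, which is what makes the interaction hard to predict.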

Computational game theory and pricing

One of the most exciting areas of AI is computational game theory or algorithmic game theory as it is also known.

Definition: an area in the intersection of game theory and computer science, with the objective of understanding and design of algorithms in strategic environments. See Computational Game Theory

One of the galvanizing events in recent AI research was when DeepMind’s AlphaGo defeated human champion Lee Sedol in 2016. Chess had fallen much earlier, in 1997, when IBM’s Deep Blue defeated Garry Kasparov, but Go was thought to be a much harder problem. DeepMind (now part of Alphabet) trained AlphaGo on games played by human masters, but a follow-up program, AlphaGo Zero, was trained only by playing games against itself, a form of self-play reinforcement learning that shares the adversarial spirit of Generative Adversarial Networks (see below). AlphaGo Zero is even more powerful and adaptive than the version trained on human data.

Some people took solace in the idea that Chess and Go are games of perfect information. They believed that AIs would struggle to win in a game like poker or contract bridge, where some information is hidden and psychology plays a big role. The confidence was misplaced.

In 2017, an AI called Libratus defeated a group of top poker professionals at heads-up (one-on-one) no-limit Texas hold’em. It beat them so badly that all four of the humans ended up behind, with Libratus the clear overall winner.

The lead designer Tuomas Sandholm said that his team “designed the AI to be able to learn any game or situation in which incomplete information is available and "opponents" may be hiding information or even engaging in deception.” Does that sound like a lot of pricing situations to you?

There are still some challenges to using computational game theory for pricing, at least for the approach to pricing that Ibbaka advocates.

Positive sum games - the most effective pricing creates positive sum games in which buyers, sellers and even competitors can all win; this is still a hard problem for AIs built around finding a Nash equilibrium.

In many pricing situations there are more than two players, which is harder than a two-player game (but this problem is being solved, as shown by Pluribus, a successor to Libratus that beat top professionals at six-player poker in 2019).

See: Important future directions in computational game theory by Sam Ganzfried.
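The gap between equilibrium-finding and positive sum pricing shows up even in a toy game. This Python sketch enumerates the pure-strategy Nash equilibria of a two-firm pricing game with made-up payoffs: both firms would do best by holding prices high, yet the only equilibrium is a price war. It is a textbook illustration under hypothetical numbers, not a description of how poker AIs work internally.

```python
from itertools import product

# Hypothetical profits (firm A, firm B) for each pair of price choices.
PAYOFFS = {
    ("high", "high"): (10, 10),
    ("high", "low"): (2, 12),
    ("low", "high"): (12, 2),
    ("low", "low"): (5, 5),
}
STRATEGIES = ["high", "low"]


def pure_nash_equilibria(payoffs, strategies):
    """Strategy pairs where neither firm gains by changing only its own price."""
    equilibria = []
    for a, b in product(strategies, repeat=2):
        pay_a, pay_b = payoffs[(a, b)]
        a_cannot_improve = all(payoffs[(alt, b)][0] <= pay_a for alt in strategies)
        b_cannot_improve = all(payoffs[(a, alt)][1] <= pay_b for alt in strategies)
        if a_cannot_improve and b_cannot_improve:
            equilibria.append((a, b))
    return equilibria


def best_joint_outcome(payoffs):
    """Strategy pair with the highest combined payoff (the positive sum outcome)."""
    return max(payoffs, key=lambda pair: sum(payoffs[pair]))


print(pure_nash_equilibria(PAYOFFS, STRATEGIES))
print(best_joint_outcome(PAYOFFS))
```

The equilibrium is ("low", "low") with a combined payoff of 10, while ("high", "high") would give 20: an AI that only seeks equilibria will not find the outcome where everyone wins unless the game itself is redesigned.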

Computational game theory is also being applied to evolutionary systems. See Steering evolution strategically. Pricing and packaging is a complex adaptive system embedded in several larger complex adaptive systems. Long term, computational game theory and computational evolution will be one of the best ways to model pricing strategy and design. But the question we asked gave a three year time horizon. I think computational game theory is on more of a ten year time horizon. Something to begin studying and to explore but probably not the place for big bets at this time. I hope I am proven wrong.

Large language models (LLMs), content generation and pricing

Many people are skeptical about the application of LLMs like GPT-4 to pricing. There are many stories making the rounds of ChatGPT hallucinating or giving the wrong answer to technical questions.

Two thoughts on these objections.

Hallucinations are the tendency of LLMs to invent answers or facts that are not in their source materials. Humans do this too. I suspect it is essential to any creative production and that if we want LLMs to contribute to creative work they will continue to hallucinate. This is actually one of the generative properties of language itself.

I am not impressed by people tricking ChatGPT into giving a wrong answer. Any tool can be badly used. We need to develop skills in the design and management of prompts. See What skills do I need to use ChatGPT?

Let’s take a step back. What is a Large Language Model?

One of the best explanations comes from Stephen Wolfram in What is ChatGPT Doing … And Why Does It Work? LLMs are large models (very large; GPT-4 is reported, though not confirmed by Open.ai, to have about one trillion parameters) built from text and other information on the Internet. GPT stands for Generative Pre-trained Transformer, which is a good description of how it works. Generative in that it can generate new content. Pre-trained in that one needs to build (train) the model, and once trained it is static. Transformer refers to the architecture, which was first reported in 2017 by Google Research in Attention is All You Need. Given a set of inputs (prompts), an LLM generates a set of outputs. Open.ai prices both inputs and outputs. See How Open.ai prices inputs and outputs for GPT-4.
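The ‘generative’ part can be illustrated with a deliberately tiny stand-in. The Python sketch below counts which word follows which in a toy corpus and then generates text by repeatedly appending the most likely next word. A real GPT model predicts tokens, not words, with a transformer that attends to the whole context rather than only the previous word, so treat this purely as an illustration of next-token generation.

```python
from collections import Counter, defaultdict


def train_bigram_model(text: str) -> dict[str, Counter]:
    """Count which word follows which word: a toy stand-in for training."""
    words = text.lower().split()
    model: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        model[current][following] += 1
    return model


def generate(model: dict[str, Counter], prompt: str, max_new_words: int = 5) -> str:
    """Greedy generation: repeatedly append the most frequent next word."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        followers = model.get(words[-1])
        if not followers:
            break
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


corpus = "customers pay for value . customers pay for outcomes . customers pay for value ."
model = train_bigram_model(corpus)
print(generate(model, "customers", max_new_words=3))
```

Here `generate(model, "customers", max_new_words=3)` returns "customers pay for value": after "for", the word "value" appears twice in the corpus and "outcomes" once, so the greedy choice is "value".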

LLMs are language based, but language is much wider than we sometimes think. Software code is a kind of language. One way of thinking about mathematics is as a language. Logical reasoning is a kind of language. Value and pricing models, as sets of equations, are also made of language.

With the right set of prompts (the inputs to a model that generate the outputs), implemented sequentially and recursively, I believe that LLMs can generate the following:

Value propositions

Value drivers and value driver equations

Pricing models

Price levels

Price strategies in competitive situations (long term this will be done by applications built using computational game theory, but short term LLMs will provide a bridge solution)

To make this work will require a few things that are still being developed.

LLMs will need to be augmented with data from pricing applications, including value proposition and value driver libraries, pricing model libraries and the systems of equations used in pricing work (there is a lot of math under the covers in pricing). This means that new ways of representing these data structures that are digestible by the systems generating LLMs may be needed. One way to do this will be with an open source LLM. LLaMA may be one place to explore this.

Prompt management systems will be needed. Value model development and pricing model design rely on many different inputs. Outputs are often repurposed as inputs. The order of operations helps determine the results. Software to manage prompts and to optimize them will be needed.

Evaluation and assessment is part of using an LLM. Assume the outputs have some errors or hallucinations and that a formal validation process is needed.
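A prompt management system of the kind described above can be sketched as a chain in which each output becomes the next input and every step must pass a validation check before it is reused. In this Python sketch the step names, templates, `fake_llm` and `non_empty` are all hypothetical placeholders: `fake_llm` stands in for a real model call so the code runs offline, and a real validator would check numbers and claims against data.

```python
from typing import Callable

# Hypothetical pipeline: each template receives the previous step's output.
PIPELINE = [
    ("value_proposition", "Describe the value proposition for: {previous}"),
    ("value_drivers", "List quantifiable value drivers for: {previous}"),
    ("pricing_model", "Propose pricing metrics aligned with: {previous}"),
]


def run_chain(llm: Callable[[str], str], seed: str,
              validate: Callable[[str, str], bool]) -> dict[str, str]:
    """Run the prompts in order, feeding each output into the next prompt.

    Every output must pass `validate` before it is reused, because later
    steps would otherwise build on errors or hallucinations.
    """
    outputs: dict[str, str] = {}
    previous = seed
    for step, template in PIPELINE:
        answer = llm(template.format(previous=previous))
        if not validate(step, answer):
            raise ValueError(f"step {step!r} failed validation")
        outputs[step] = answer
        previous = answer
    return outputs


def fake_llm(prompt: str) -> str:
    """Offline stand-in for a real model call (hypothetical)."""
    return f"[model answer to: {prompt}]"


def non_empty(step: str, answer: str) -> bool:
    """Placeholder validator; a real one would check claims and numbers against data."""
    return bool(answer.strip())


results = run_chain(fake_llm, "an AI copywriting tool", non_empty)
for step, answer in results.items():
    print(step, "->", answer)
```

Because the order of operations matters, the pipeline is an explicit ordered list; reordering the steps or editing a template changes what every later prompt sees.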

Ibbaka has its work cut out as it moves to leverage LLMs in its pricing and customer value management platform Ibbaka Valio.

Generative Adversarial Networks (GANs) and pricing

Not covered in the poll were Generative Adversarial Networks or GANs. GANs pit two AIs against each other (a generator and a discriminator) so that each improves faster. AlphaGo Zero, mentioned above, is not strictly a GAN, but its self-play training follows a similar adversarial logic.

This architecture will become standard in training the AIs used in pricing.

GANs are already being used to train algorithmic trading programs. Alexandre Gonfalonieri covers some business uses in Integration of Generative Adversarial Networks in Business Models.

One can think of many ways to use this AI architecture in pricing applications:

Have pricing strategies compete over time and test impact on market share, revenues, profitability.

Generate different pricing metrics and combinations of pricing metrics and test for performance against different market structures (and then feed the results back in so that the market structure evolves).

Model market dynamics to understand how price elasticity of demand and cross price elasticity interact.
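Having pricing strategies compete over time can be prototyped without a GAN. The Python sketch below uses a simpler, swapped-in technique, iterated best response: two firms with linear, differentiated demand (hypothetical parameters `a`, `b`, `c` and `unit_cost`) repeatedly reset their price to the profit-maximizing response to the rival’s last price. The cross price term `c` is what ties each firm’s outcome to the other’s pricing.

```python
def best_response(rival_price: float, a: float, b: float, c: float, unit_cost: float) -> float:
    """Profit-maximizing price against a fixed rival price.

    Assumed demand: q = a - b * own_price + c * rival_price (c > 0: substitutes).
    Profit (own_price - unit_cost) * q is maximized where its derivative is zero:
    own_price = (a + c * rival_price + b * unit_cost) / (2 * b).
    """
    return (a + c * rival_price + b * unit_cost) / (2 * b)


def compete(rounds: int = 50, a: float = 100.0, b: float = 2.0, c: float = 1.0,
            unit_cost: float = 10.0, start: tuple[float, float] = (80.0, 20.0)):
    """Both firms update simultaneously, each responding to the other's last price."""
    p1, p2 = start
    history = [(p1, p2)]
    for _ in range(rounds):
        p1, p2 = (best_response(p2, a, b, c, unit_cost),
                  best_response(p1, a, b, c, unit_cost))
        history.append((p1, p2))
    return history


history = compete()
for step in (0, 1, 2, 10, 50):
    p1, p2 = history[step]
    print(f"round {step:2d}: firm 1 = {p1:6.2f}, firm 2 = {p2:6.2f}")
```

With these parameters both prices converge to (a + b × unit_cost) / (2b − c) = 40, whatever the starting prices, because each response only moves a quarter as far as the rival’s last move (c / 2b = 0.25).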

GANs will be combined with other AI approaches to accelerate and automate development.

Social Network Analysis (SNA) and pricing

Social Network Analysis (SNA) is a branch of network science that asks how graphs are connected. Ibbaka has been applying this for several years to find clusters in the data we use for market segmentation, value segmentation and pricing segmentation. Segmentation is the foundation of good pricing. One wants to identify clusters of users that get value in different ways and then target the segments where you can deliver the highest differentiated value at a reasonable cost.

One of the keys to AI is developing ways to represent data that are amenable to AI. Graphs are one way to do this.

One can represent the many different pieces of data used in value and pricing analysis as a graph (a set of nodes and edges connecting them) and then use network science techniques to understand how value and price are connected, or how customer or user characteristics and behaviors shape willingness to pay or probability of upgrade or renewal. SNA is just one of many relevant approaches. One could use percolation to understand how price changes will be accepted in a market, measures of network centrality to understand how to connect value paths, and so on.

Thamindu Dilshan Jayawickrama gives a good overview of community detection in Community Detection Algorithms.
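A minimal version of representing value data as a graph can be sketched in plain Python: customers and capabilities are nodes, usage is an edge, degree centrality shows which nodes connect the most, and connected components give a first, crude grouping. The customer and capability names are hypothetical, and connected components are much simpler than true community detection algorithms such as Louvain or label propagation, which can find dense clusters inside a single connected graph.

```python
from collections import defaultdict, deque

# Hypothetical usage data: (customer, capability) pairs become graph edges.
EDGES = [
    ("acme", "bulk_export"), ("globex", "bulk_export"),
    ("initech", "ai_drafting"), ("umbrella", "ai_drafting"),
    ("umbrella", "brand_voice"),
]


def build_graph(edges):
    """Undirected adjacency sets: customers and capabilities are both nodes."""
    graph = defaultdict(set)
    for u, v in edges:
        graph[u].add(v)
        graph[v].add(u)
    return dict(graph)


def degree_centrality(graph):
    """Share of the other nodes each node is directly connected to."""
    n = len(graph)
    return {node: len(neighbors) / (n - 1) for node, neighbors in graph.items()}


def connected_components(graph):
    """Breadth-first search; each component is a crude first-cut cluster."""
    seen, components = set(), []
    for start in graph:
        if start in seen:
            continue
        seen.add(start)
        queue, component = deque([start]), set()
        while queue:
            node = queue.popleft()
            component.add(node)
            for neighbor in graph[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        components.append(component)
    return components


graph = build_graph(EDGES)
print(connected_components(graph))
print(degree_centrality(graph)["umbrella"])
```

In this toy data the export users and the AI drafting users fall into separate groups, a hint that they may get value in different ways and could warrant different pricing.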

Conclusions: Leveraging AI in Value Modeling and Pricing Design

Over the next 3 years, AI will completely transform how value models are developed, pricing models designed, the impact of pricing actions assessed and how pricing strategies are implemented.

Predictive analytics alone will not be enough to do this. Other approaches including the use of Large Language Models and Computational Game Theory will be required. New ways of representing and connecting data and models will open new technologies and drive innovation.

Ideally, this transformation in how we price will move us away from pricing as a zero sum game and move us to positive sum games where buyers, sellers and competitors all benefit from better pricing design and execution. See How to negotiate price (getting to positive sum pricing).

6. AI Pricing Studies on: The Reddit API Pricing Kerfuffle

TL;DR The pushback on Reddit’s API pricing has lessons for all of us:

Align price with value; if users are getting value in different ways, consider different approaches to pricing (different pricing metrics, different pricing levels)

Respect your users and community and the value they contribute to your data; make sure that you have the rights to use the data in the ways the API access enables

Make users and customers part of the pricing design process by engaging them around value

Communicate early and often and remember that communication is two way and is only taking place if you are listening as well as speaking

Most of us participate in one subreddit or feed or another. It is a place where many important conversations take place on every topic imaginable. Reddit covers more things than I can imagine. It has become an essential part of many communities. And I am charmed that Reddit was originally written in my favorite language, Common Lisp (there are good subreddits on Lisp), though it was quickly moved to Python.

Given Reddit’s importance, many of us are concerned about the recent kerfuffle over Reddit API pricing and were disturbed by the Reddit blackout: on June 12 many subreddits went dark and, as of writing, remain dark. People are upset for a number of reasons, but the quick version is:

The new API pricing if enforced will force some services such as the Apollo App to shut down - see Apollo’s Christian Selig explains his fight with Reddit — and why users revolted

The community feels that it is contributing the data that Reddit wants to monetize

The community feels that the API enforcement and pricing were sprung on them without consultation or time to prepare

Why did Reddit feel the need to monetize API access?

Manage API usage

Monetize use of data (especially for use in Generative AI and Large Language Models)

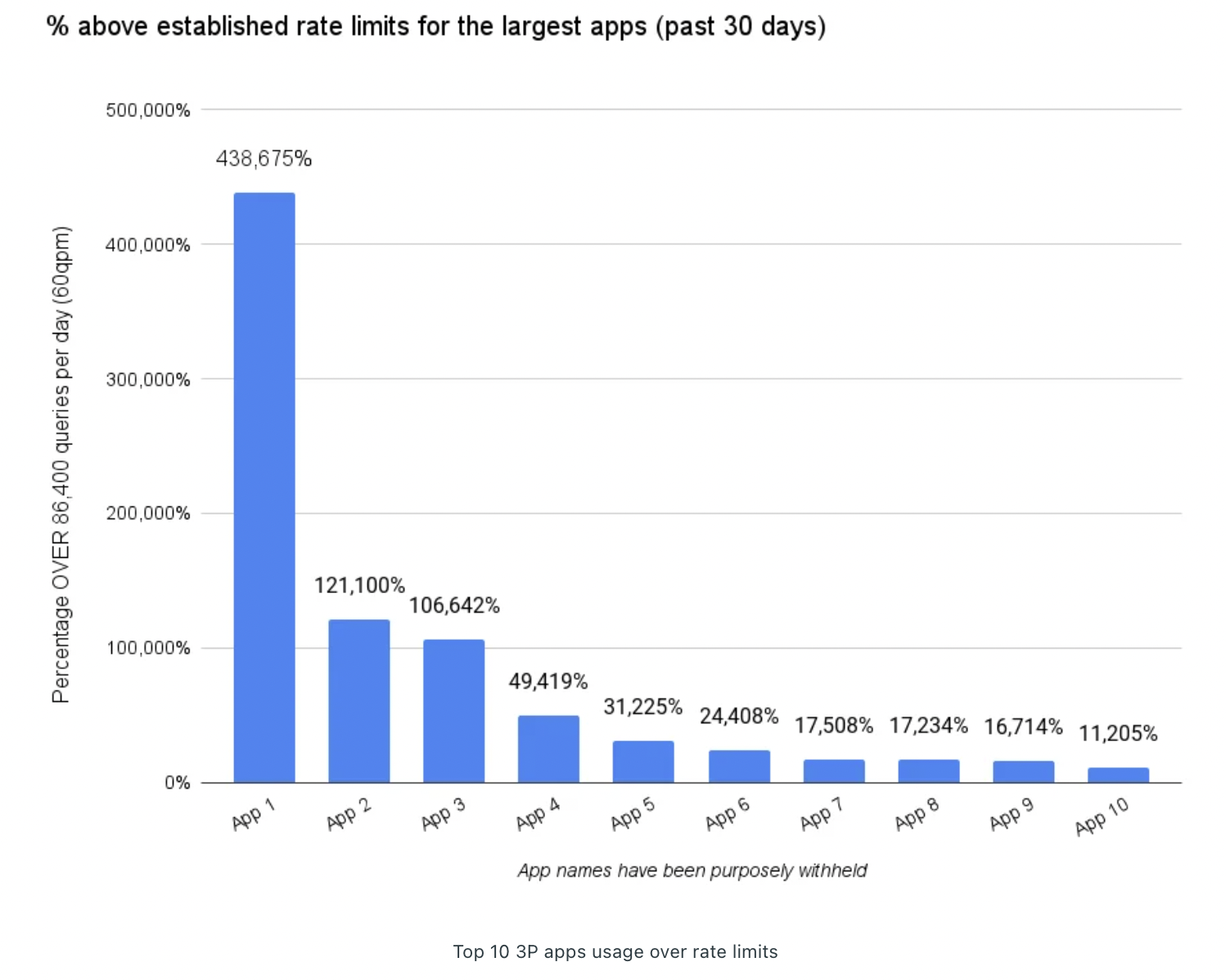

Reddit had good reasons to implement API pricing, and it did explain them. For example, API Update: Enterprise Level Tier for Large Scale Applications provides a good version of the story from Reddit’s point of view.

Manage API usage

The first reason is that some applications were well over the usage limits.

Reddit could have just capped access, but this would have crippled a number of third party apps and would not have been in anyone’s interest. A pricing mechanism to regulate access makes sense.

Monetize use of data

A second reason to monetize API access is that some companies have been using this as a way to feed Large Language Models (LLMs). There is a general concern that the LLM companies like Open.ai, Google, Nvidia, Meta, Stability AI and so on are taking advantage of open access to data to create wealth that should be shared with the organizations (and people) providing the data used to train these models.

Reddit should be monetizing APIs and revenue from API access can fund ongoing innovation that will benefit all Reddit users.

What went wrong with Reddit’s API pricing?

Given the reasons Reddit has given, and the emerging consensus that people should be compensated for the value of their data, what went wrong for Reddit? There are four root causes.

Price and value are not aligned for different API users

The community believes that Reddit is monetizing data that it has contributed

Communication was not as clear and consistent as it could have been

Conspiracy theories were triggered

Price and value are not aligned for different API users

Reddit has many different organizations accessing its APIs for many different reasons. It is unlikely that one pricing model will fit all.

Some organizations have pointed out that under the new API pricing they would be paying more to Reddit than they take in. Apollo has been especially vocal about this (see the link above), and many others accessing the API are communities, nonprofits or small organizations getting only small incremental value.

Reddit has been trying to address this with carve outs and exceptions. This is a sign that the pricing model is broken. It confuses the market and encourages people to shout out ‘Hey, what about me, I’m special too.’

The first step before changing pricing is to do a value-based market segmentation. A good value-based segment is

“a group of current or potential users that get value in the same way”

To do this in a way that is useful for pricing design you need to have a formal value model. See SaaS pricing is model driven.

Pricing then needs to be designed to align price and value for the target segments (there are almost always some edge cases that cannot be properly priced, but one can generally cover more than 90% of the potential market).

The community believes that Reddit is monetizing data that it has contributed

The data that is accessed through the API was, at the end of the day, generated by users. The users see themselves as part of communities. They did not engage with Reddit to create data for others to monetize. Pricing API access became a flash point for this concern.

Reddit is not the only place this is happening. The rise of Generative AI and the way that Large Language Models (LLMs) are being built has made this a hot topic for content creators of all kinds.

This is an unsolved issue, but one that we all need to work together to solve, and not just at Reddit. Think about YouTube. YouTube is probably the best source of data to train Generative AI models for video and to pull text from videos into LLMs. Long term, this could disrupt the people creating the videos. Is this a use of video content that was contemplated when content was uploaded to YouTube? See Why YouTube Could Give Google an Edge in AI on The Information (sorry, behind a paywall). Another relevant article from The Information that touches on this is The Law is Coming for AI but Maybe Not the Way You Think.

Reddit, with its active and engaged communities could be one place where we solve this problem.

Communication was not as clear and consistent as it could have been

One of the underlying issues is that the Reddit community feels it was not heard, or respected. No doubt Reddit feels this is unfair. They have been communicating about this for a while now. This post, An Update Regarding Reddit’s API, came out more than two months ago and there was a lot of community discussion.

But it is the perception that matters here, and the perception is that:

This was imposed without real consultation

It is not fair as the price is not aligned with value and the value is not shared

It is not fair as the value is created by the community

Reddit probably needed more time to develop the value model and value segmentation needed to develop the API pricing and communication needed to be part of that design process. Making customers part of the pricing design is an emerging best practice.

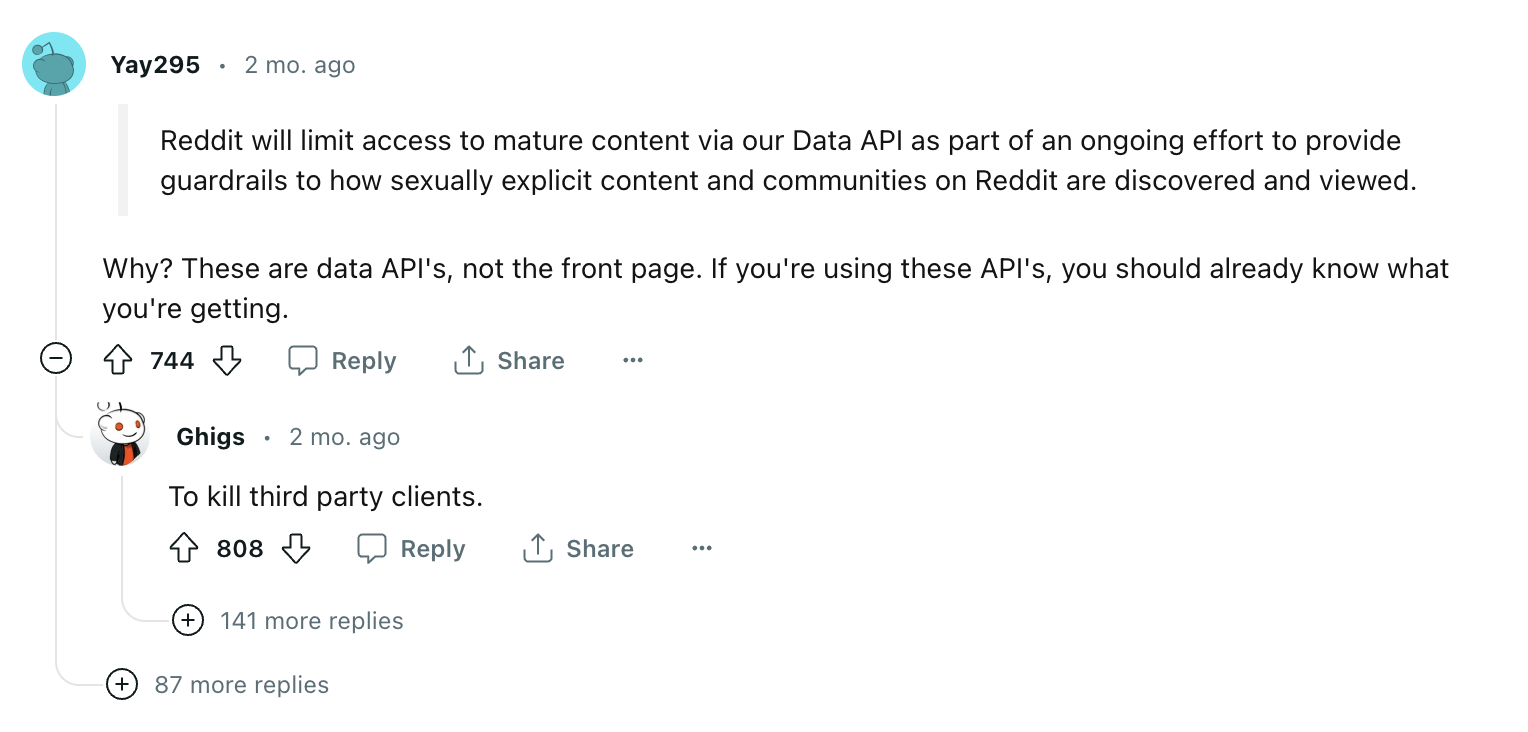

Conspiracy theories were triggered

If you read through all the comments from An Update Regarding Reddit’s API you will find conspiracy theories surfacing. The most common one is that Reddit’s intention is to kill off third party apps. Personally I don’t believe this, but there are people who do. The following is from the above post.

Part of this reflects people’s experience with Twitter. Twitter’s motivations and decisions were always a mystery to me; maybe that was what Twitter was doing, but I don’t think that is Reddit’s intention.

Pricing can be used to shape behavior and this can be an important part of pricing design. But Ibbaka’s experience is that you need to be transparent about this. If Reddit, or anyone else, wants to shut down third-party vendors, or narrow things down to a set of partners that provide value in specific ways, then they should be clear on this and not try to do it indirectly with pricing metrics or pricing levels.

What can we learn about pricing design and the introduction of pricing changes from Reddit’s experience?

Many companies are implementing API strategies and will need to price API access.

Many more want to know how to monetize ‘their data’ (is it ‘their data’, ‘their customer’s data’ or ‘the user’s data’?) in a world where AI is making data of all kinds much more valuable.

Given this, what can we learn from the Reddit experience?

Begin by segmenting API users by how they get value; to do this you need a value model

Design pricing for each segment; one size will not fit all

Be transparent about what you are doing and why

Show that the pricing represents a fair distribution of value between parties (be able to say what the value ratio is and price to the target value ratio)

Engage with customers as part of the pricing design process (do this by framing the conversation around data)

Have clarity on data ownership and rights, don’t assume that you have rights to do things with data that were not imagined when the original agreements were put in place

AI Pricing Studies on: How OpenAI prices inputs and outputs for GPT-4

Many companies are looking at how to use Open.ai’s GPT-4 to inject AI into existing solutions. Others are looking to build new applications or even whole new software categories on the back of Large Language Models (LLMs). To make this work we are going to need a good understanding of how access to and use of these models will be priced. Let’s start with the current pricing for GPT-4. It is possible that this pricing will shape that of other LLM vendors, at least those that provide their models to many other companies. Or pricing may diverge and drive differentiation. It will be interesting to see, and it will channel the overall direction of innovation.

In April 2023, GPT-4 was available in two packages and had two pricing metrics.

The pricing page is shown below. In addition to GPT-4, Open.ai publishes pricing for Chat, InstructGPT, model tuning, Image models and Audio models. Many real world applications will combine more than one model. The interactions between the different pricing models will be important, but let’s start with the basics.

Before diving into the pricing, one needs to understand the key input metric, tokens. Tokens show up all over the place in LLMs and ‘tokenization’ is the first step in building and using these models. Here is a good introduction.

A token is a part of a word. Short and simple words are often one token, longer and more complex words can be two or three tokens.

It can get more complex, but that is the basic idea. Open.ai bases its price on tokens. This makes sense at this point. It allows them to price consistently across some very different applications. Other companies could learn from this. One place to start to think about pricing metrics is at the atomic level of the application, whether this is a token, an event, a variable or an object and its instantiations.

Open.ai has two packages. They are based on ‘context,’ 8K of context and 32K of context. Generally more sophisticated applications solving harder problems will need more context.

The pricing metrics are the Input Tokens (in the prompts) and the Output Tokens (the content or answer generated).

For the 8K and 32K contexts, the price is as follows:

Input: $0.03 (8K) or $0.06 (32K) per thousand tokens

Output: $0.06 (8K) or $0.12 (32K) per thousand tokens

Note that outputs are priced twice as high as inputs. This may encourage more and larger inputs (prompts), and more elaborate prompts will often provide better outputs.

Why did Open.ai choose to have two different pricing metrics? The two pricing metrics sound very similar.

The number of tokens input and the number of tokens output could be very different.

Scenario 1: Write me a story

Prompt: “Tell me a fairy tale in the style of Hans Christian Andersen where a robot becomes an orphan and then falls in love with a puppet.” (Try this, it is kind of fun.)

Here the prompt has about 20 tokens but the output could have several thousand tokens.

Scenario 2: Summarize this set of reports into a spreadsheet and calculate the potential ROI

Prompts: “Five spreadsheets with a total of 20,000 cells and two documents of 10,000 words each.” This works well too, but needs good input data, well-structured spreadsheets and a rich data set.

Here the set of prompts would have about 30,000 tokens but the output would have only, say, 1,000 tokens.

Assuming both are on the 32K package, the price for each scenario would be …

Scenario 1: $0.0012 (20 input tokens) + $0.30 (about 2,500 output tokens) ≈ $0.30

Scenario 2: $1.80 (30,000 input tokens) + $0.12 (1,000 output tokens) = $1.92

These may sound like small usage fees, but there are companies that are planning to process millions of prompts a day as they scale operations. It adds up.
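The arithmetic is easy to get wrong by hand, so here is a small Python sketch that reproduces the two scenarios from the April 2023 GPT-4 prices above. The 2,500 output tokens for the story is an assumption consistent with a $0.30 output charge, the context limits of 8,192 and 32,768 tokens are the sizes behind the ‘8K’ and ‘32K’ labels, the daily volume is hypothetical, and the function names are illustrative.

```python
from dataclasses import dataclass

# GPT-4 list prices in US$ per 1,000 tokens, April 2023 (from the text above).
PRICES_PER_1K = {
    "8k": {"input": 0.03, "output": 0.06},
    "32k": {"input": 0.06, "output": 0.12},
}
# Maximum tokens (input plus output) each package can handle in one request.
CONTEXT_LIMIT = {"8k": 8_192, "32k": 32_768}


@dataclass
class Request:
    input_tokens: int
    output_tokens: int


def request_cost(req: Request, package: str) -> float:
    """US$ cost of one request; input and output tokens are priced separately."""
    total = req.input_tokens + req.output_tokens
    if total > CONTEXT_LIMIT[package]:
        raise ValueError(f"{total} tokens do not fit in the {package} context")
    rates = PRICES_PER_1K[package]
    return (req.input_tokens / 1000 * rates["input"]
            + req.output_tokens / 1000 * rates["output"])


story = Request(input_tokens=20, output_tokens=2_500)       # Scenario 1 (output assumed)
report = Request(input_tokens=30_000, output_tokens=1_000)  # Scenario 2

print(f"Scenario 1: ${request_cost(story, '32k'):.4f}")
print(f"Scenario 2: ${request_cost(report, '32k'):.2f}")
# Hypothetical scale: one million Scenario 2 requests per day.
print(f"Per day: ${request_cost(report, '32k') * 1_000_000:,.0f}")
```

The context check also shows why Scenario 2 has to run on the 32K package: its 31,000 tokens will not fit in an 8K context, so pricing it at the 8K rates ($0.90 + $0.06) would understate the cost by half. At a million such requests a day, the bill is about $1.92 million a day.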

The two pricing factors interact in interesting ways across different use cases. In many use cases, the output will drive the pricing, but in others, like scenario two, and many other analytical use cases, it is the inputs that will drive the price.
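Because output tokens cost exactly twice as much as input tokens in both packages, the point at which inputs start to drive the bill can be written down directly. With T for token counts and p for the per-1,000-token prices:

```latex
\begin{aligned}
C &= \frac{T_{\text{in}}\,p_{\text{in}} + T_{\text{out}}\,p_{\text{out}}}{1000},
\qquad p_{\text{out}} = 2\,p_{\text{in}} \\
T_{\text{in}}\,p_{\text{in}} > T_{\text{out}}\,p_{\text{out}}
&\iff T_{\text{in}} > 2\,T_{\text{out}}
\end{aligned}
```

Scenario 1 (about 20 input tokens against several thousand output tokens) sits far on the output side; Scenario 2 (30,000 input tokens against 1,000 output tokens) sits far on the input side.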

Things to think about: this may be an effective pricing model. One would need to build a value model and a cost model and process a lot of data to really know, but it seems like a good place to start. I assume Open.ai will be doing a lot of this analysis over the next few months, and I expect the pricing to change. They are likely using AI to support this analysis, and I hope they share their approach.

One reason this model works is that it uses two pricing metrics. Using two pricing metrics, also known as hybrid pricing, is key to having flexible pricing that will work at different scales and in different scenarios. Most SaaS companies should be considering some form of hybrid pricing.

AI Pricing Studies on: Microsoft puts a price on AI

There were two big events in the generative AI world the week of July 17.

Microsoft revealed its pricing for Copilot, its application of Open.ai’s GPT to MS365.

Meta released Llama 2, the next generation of its open source model.

I think the second is the more important announcement, and we will look at the implications in the future, but for now let’s look at Microsoft’s pricing for Copilot. Note that Microsoft is also participating in Llama … Google needs to up its game.

What do you think about Microsoft’s pricing for Copilot?

Please take three minutes to let us know.

How much would you pay for an AI assistant for MS 365 or Google Workplace?

The form of these questions is known as a Van Westendorp study. Open.ai ran one of these through its Discord server before pricing ChatGPT Plus at US$20 per month. In doing this, Open.ai set a reference price for Microsoft.

Is Copilot worth more than ChatGPT?

I am not generally a fan of Van Westendorp, or of any survey that focuses on price rather than value. If I need direct willingness-to-pay insights, I find a conjoint survey much more informative. I would not make a pricing decision based on a Van Westendorp survey (and definitely not on a Gabor-Granger study). That said, Van Westendorp surveys can be interesting (so please go back up and take the AI assistant survey).

You will be asked four questions:

At what price would you consider Copilot to be so expensive that you would not consider buying it? (Too expensive)

At what price would you consider Copilot to be priced so low that you would feel the quality couldn’t be very good? (Too cheap)

At what price would you consider Copilot starting to get expensive, so that it is not out of the question, but you would have to give some thought to buying it? (Expensive/High Side)

At what price would you consider Copilot to be a bargain—a great buy for the money? (Cheap/Good Value)
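For readers curious how answers to these four questions are usually turned into price points, here is a minimal Python sketch. It follows common conventions that analysts apply with some variation. The optimal price point is taken as the price where the share of people calling a price "too cheap" meets the share calling it "too expensive". The indifference price point is where the "cheap" and "expensive" curves meet. Crossings are located on a whole-dollar grid. The four respondents are made up for illustration only, and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Response:
    """One respondent's answers to the four Van Westendorp questions (prices in US$)."""
    too_cheap: float
    cheap: float
    expensive: float
    too_expensive: float


def share_at_or_above(values: list[float], price: float) -> float:
    """Share of respondents whose threshold is at or above price (a falling curve)."""
    return sum(v >= price for v in values) / len(values)


def share_at_or_below(values: list[float], price: float) -> float:
    """Share of respondents whose threshold is at or below price (a rising curve)."""
    return sum(v <= price for v in values) / len(values)


def first_crossing(falling: list[float], rising: list[float], prices) -> Optional[float]:
    """First grid price at which the rising curve meets or passes the falling one."""
    for p in prices:
        if share_at_or_below(rising, p) >= share_at_or_above(falling, p):
            return p
    return None


def van_westendorp(responses: list[Response], prices) -> tuple:
    """Return (optimal price point, indifference price point) on the given price grid."""
    opp = first_crossing([r.too_cheap for r in responses],
                         [r.too_expensive for r in responses], prices)
    ipp = first_crossing([r.cheap for r in responses],
                         [r.expensive for r in responses], prices)
    return opp, ipp


# Made-up answers from four respondents, for illustration only.
responses = [
    Response(5, 8, 12, 15),
    Response(10, 15, 25, 35),
    Response(15, 20, 30, 45),
    Response(25, 30, 45, 60),
]
print(van_westendorp(responses, range(0, 101)))  # (16, 21)
```

The output is a pair of prices. It does not show what buyers value, which is part of why a conjoint study is usually more informative.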

In fact, Copilot has been priced at a premium. Here is the Canadian pricing for 365. Copilot is more expensive than any of the plans offered in Canada.

For reference, here is the Google Workspace pricing in Canadian dollars.

Google seems to price slightly below Microsoft.

Microsoft Copilot pricing got a lot of press. Here are some of the more interesting articles.

The Financial Times Microsoft to charge $30 per month for generative AI features

The Verge Microsoft puts a steep price on Copilot, its AI-powered future of Office documents

Ars Technica Microsoft 365’s Copilot assistant for businesses comes with a hefty price tag

For a broader (and often negative) reaction, check out Reddit: Microsoft Announced "Microsoft 365 Copilot" Monthly Pricing Now!

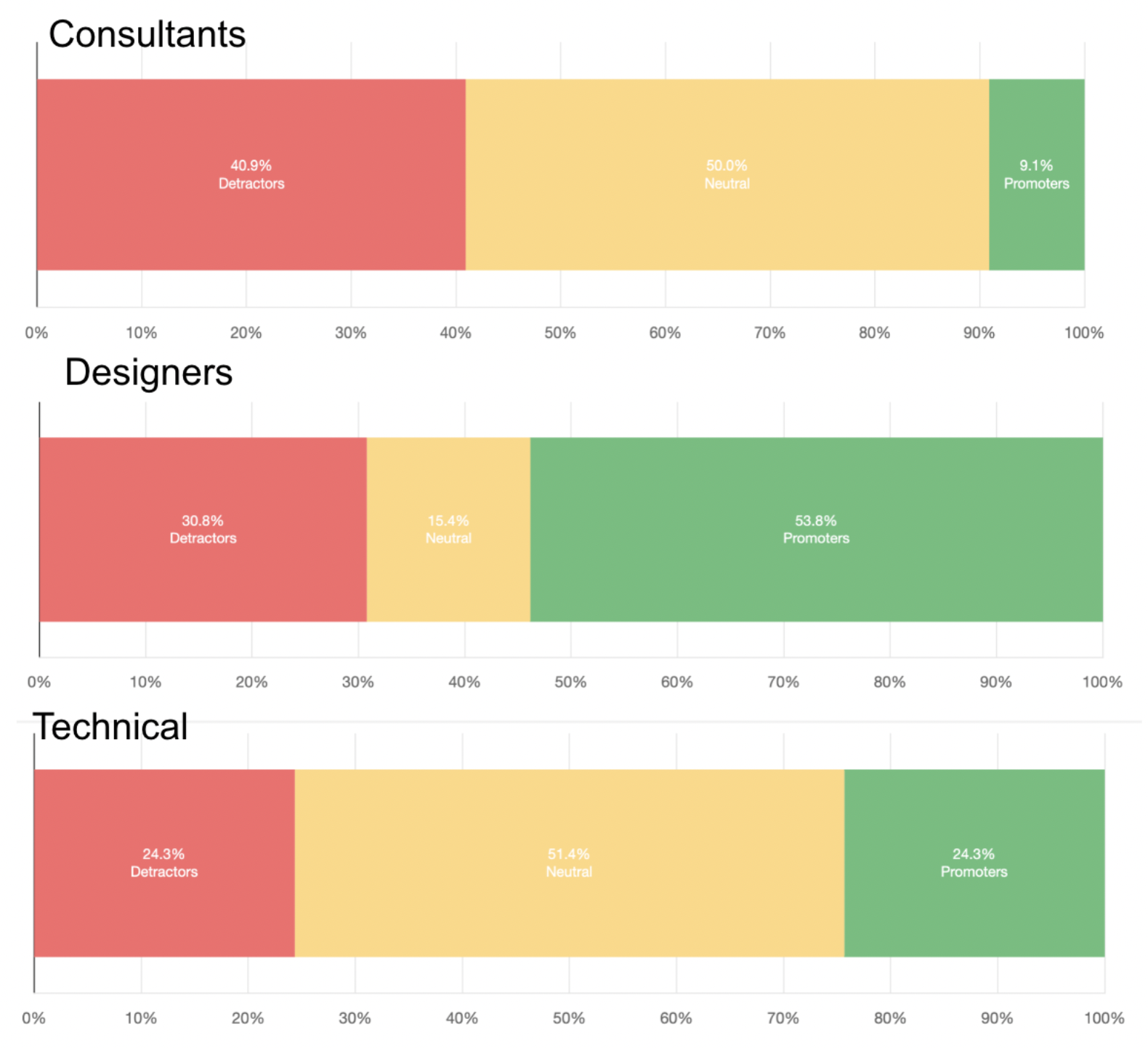

I also asked people in the Professional Pricing Society what they thought about Copilot pricing. There were some interesting responses.

“I think generative AI is getting terribly underpriced because Chatgpt set a bad precedent with little research with the $20 off the gates. The value some industries are deriving is off the charts and paying very nominal amount.”

—

“Generative AI is underpriced because potential users had no reference whatsoever on the value it could provide them, now they have a reference, but users are unsure of their own willingness to pay, so Microsoft is making a cautious step forward, because they aren’t sure either. An instance of adjustment heuristic.”

—

“Depends... the issue is that there is a learning curve to using AI well and have it be actually a net productivity gain. I don't know if your average joe new to LLM and AI tools will be willing to fork out that kind of money for something this new. Feels like more price discrimination and segmentation is necessary.”

—

“It really depends on what it can do I think. Microsoft Office has a history of "smart" features that ended up not very useful. I will definitely try it before deciding whether to buy or not.”

—

“This could be a “value for the user” question.

And a sustainability question.

Value : one would pay more because of the time saved, the comfort of “not doing doing that boring task”, etc… all worth for that person more than 360 $

A Sustainability question too.

True, Microsoft could easily charge more.

For those clients able to afford paying more.

But …

Those technologies are not for elites anymore.

They are so ready, so well designed now that anyone can use it.

Whatever level of education, work etc…

I just dream that the price was set low, so that it makes it accessible for most.

Instead of increasing inequality in efficiency across humans.”

I want to call out two points here.

“Feels like more price discrimination and segmentation is necessary.”

I kind of agree with this. There are many different use cases for this AI chatbot and many different user personas. Pushing too much functionality in will …

overwhelm many users who just want some basic help. These people will get frustrated when exposed to too much functionality. They will not appreciate the value offered and not be able to realise it.

frame the value of advanced functionality at a low level (what some people think Open.ai has already done with ChatGPT)

make it much more difficult to price innovations (both for Microsoft and for other companies).

That said, Microsoft needs to serve a broad market; it cannot afford to have too much nuance. The pricing is definitely at a premium compared to other parts of Microsoft Office.

“I just dream that the price was set low, so that it makes it accessible for most. Instead of increasing inequality in efficiency across humans.”

This is perhaps one of the most important questions facing the technology industry, and society generally.

Will Generative AI and AI generally contribute to further wealth concentration?

What design, ownership and pricing choices do we need to make to ensure that Generative AI is of benefit to all?

How can we put the ability to train large language models in the hands of everyone who wants to engage?

These questions are far more important than the per-user price of Copilot or ChatGPT. This is why I think the Llama 2 and Hugging Face theme is more important than GPT and Microsoft.

A few weeks ago, we asked the Design Thinking Group the following question:

How will large language models (LLMs or Foundation Models) evolve over the next decade?

We find the vision of a personalized foundation model fascinating, especially for people who do creative work. It is something we are exploring ourselves, and Llama 2 will help us do this.

AI Pricing Studies on: Cohere LLM

OpenAI has taken the world by storm, commanding the spotlight when it comes to large language models (LLMs). Their groundbreaking advancements have captivated the attention of enthusiasts and experts alike. However, they are not alone in this domain. Other key players like Google (PaLM 2), Nvidia (NeMo), and Meta (LLaMA) have also made significant contributions to shaping the landscape. What truly excites us, though, is the emergence of startups as formidable contenders in this field.

Among these rising stars, Cohere stands out. Founded in Toronto, Cohere counts Aidan Gomez among its co-founders. Gomez was a co-author of the influential research paper "Attention is All You Need," which introduced the Transformer architecture and revolutionized artificial intelligence. The Transformer is the backbone of popular LLMs such as OpenAI's GPT-4. Cohere aims to redefine the landscape of enterprise AI.

Cohere offers an important alternative to the LLMs offered by the big hub players. In this post, we look at the use cases that Cohere prices and compare its pricing and positioning to market leader Open.ai.

Where does Cohere AI play in the Ibbaka AI Ecology? The key niches in this framework are Data, Tools, Workflow Management, Infrastructure and Models. Cohere provides models.

There are many ways one could price models. Historically, they tended to be priced based on either the amount of data used to train the model or on the size of the model (which incented companies to build bloated models, but then large language models are not exactly svelte). This has changed with the emergence of hosted, prebuilt models, accessed by many people for even more different reasons.

The emerging pattern for large language models seems to be to price based on the number of tokens in the input (prompt) and the number of tokens in the output. The PeakSpan-Ibbaka survey on Net Revenue Retention (you can still take the survey here) has 15 responses from AI companies so far (out of a total of about 250). A variety of pricing metrics are used, with input and output tokens being the most common. (We will be going into a lot more detail when we analyze this data for the final report, which will be released at SaaStr in September in San Francisco.)

The 5 Cohere AI offers and how they are priced

Cohere has formal support for five different offers or use cases.

Embed: For ML teams looking to build their own text analysis applications, Embed offers high-performance, accurate embeddings in English and 100+ languages.

Generate: Generate produces unique content for emails, landing pages, product descriptions, and more.

Classify: Classify organizes information for more effective content moderation, analysis, and chatbot experiences.

Summarize: Summarize provides text summarization capabilities at scale.

Rerank (Search Enhancement): Rerank provides a powerful semantic boost to the search quality of any keyword or vector search system without requiring any overhaul or replacement.

There is also a free plan for users to test the platform, allowing them to get hands-on experience before integrating it into their products. The free plan provides a Trial API key with specific rate limits for different endpoints. For example, the Generate and Summarize endpoints have a limit of 5,000 generation units per month, while the Embed and Classify endpoints are limited to 100 calls per minute.

A Production API key offers a higher rate limit of 10,000 calls per minute.
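Per-minute limits like these are packaging fences. An application built on the Trial key has to stay under 100 calls per minute for Embed or Classify, or move to a Production key. Below is a generic client-side sliding-window throttle in Python that illustrates what such a limit means in practice. It is not Cohere code. The class name is hypothetical, and timestamps are passed in explicitly so the behaviour is easy to follow.

```python
from collections import deque


class SlidingWindowLimiter:
    """Allow at most max_calls calls in any window_seconds-long window (client-side throttle)."""

    def __init__(self, max_calls: int, window_seconds: float = 60.0) -> None:
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self.recent = deque()  # timestamps of accepted calls still inside the window

    def allow(self, now: float) -> bool:
        # Drop timestamps that have aged out of the window.
        while self.recent and now - self.recent[0] >= self.window_seconds:
            self.recent.popleft()
        if len(self.recent) < self.max_calls:
            self.recent.append(now)
            return True
        return False


trial = SlidingWindowLimiter(max_calls=100)  # e.g. a 100 calls/minute Trial limit
decisions = [trial.allow(now=i * 0.1) for i in range(101)]
print(decisions.count(True))  # 100: the 101st call inside the same minute is refused
print(trial.allow(now=60.0))  # True: the first call has aged out of the window
```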

Embed API Pricing

Pricing Metric: Per Token

The default pricing is $0.40 per 1 million tokens.

For higher token quantities, a pricing option is available at $0.80 per 1 million tokens. Both options deliver optimal performance for sequences under 512 tokens, and you can generate up to 96 embeddings per API call. Note that one has to pay a higher price for increased capacity. At this point in time, though, few companies will need to use the higher-capacity offer.
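Two numbers matter when estimating an Embed bill: total tokens, which set the price, and the 96-embeddings-per-call cap, which sets how many API calls are needed. This Python sketch combines them. It assumes each text stays under the 512-token guidance, and it assumes a hypothetical workload of 10,000 texts averaging 200 tokens. The function name is illustrative, not part of Cohere's SDK.

```python
import math

DEFAULT_PRICE_PER_MILLION = 0.40  # default Embed price quoted above (US$)
MAX_TEXTS_PER_CALL = 96           # embeddings per API call, as quoted above


def embed_estimate(num_texts: int, avg_tokens_per_text: int,
                   price_per_million: float = DEFAULT_PRICE_PER_MILLION) -> tuple[int, float]:
    """Return (API calls needed, US$ cost) to embed num_texts texts."""
    calls = math.ceil(num_texts / MAX_TEXTS_PER_CALL)
    total_tokens = num_texts * avg_tokens_per_text
    return calls, total_tokens / 1_000_000 * price_per_million


calls, cost = embed_estimate(10_000, 200)
print(calls, round(cost, 2))  # 105 0.8
```

At the $0.80 option, the same workload would need the same 105 calls at twice the cost.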

Generate API Pricing

Pricing Metric: Per Token

The default pricing is set at $15 per 1 million tokens.

The custom pricing option is available at $30 per 1 million tokens, providing an alternative for users with larger token quantities or specialized requirements. With both options, input and output tokens are charged equally. Again, one pays more for high volume.
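Because Generate charges input and output tokens at the same rate, only their sum matters. This is a single-metric contrast with Open.ai's two-metric model. The Python sketch below reuses the token counts from the "Write me a story" scenario in the Open.ai section (about 20 input tokens and an assumed 2,500 output tokens). The function name is illustrative. The comparison ignores differences in model quality and context size. The same arithmetic applies to Summarize at its default rate.

```python
DEFAULT_PER_MILLION = 15.0  # default Generate price quoted above (US$)
CUSTOM_PER_MILLION = 30.0   # custom option quoted above (US$)


def generate_cost(input_tokens: int, output_tokens: int,
                  price_per_million: float = DEFAULT_PER_MILLION) -> float:
    """Input and output tokens are billed at the same rate, so only their sum matters."""
    return (input_tokens + output_tokens) / 1_000_000 * price_per_million


# Token counts borrowed from the story scenario (20 in, an assumed 2,500 out).
print(round(generate_cost(20, 2_500), 4))                      # 0.0378
print(round(generate_cost(20, 2_500, CUSTOM_PER_MILLION), 4))  # 0.0756
```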

Classify API Pricing

Pricing Metric: Per Classification

The default pricing is set at $0.20 per 1,000 classifications.

Each text input to be classified counts as one classification, and there is no charge for providing examples.
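Classify uses a different pricing metric from the other endpoints: a count of classified inputs rather than tokens. Labelled examples are free. In the minimal Python sketch below, the example count is accepted but has no effect on the price. The workload (50,000 hypothetical support tickets with 10 examples) and the function name are illustrative.

```python
PRICE_PER_1K_CLASSIFICATIONS = 0.20  # default Classify price quoted above (US$)


def classify_cost(num_inputs: int, num_examples: int = 0) -> float:
    """Each text input is one billable classification; labelled examples are not charged."""
    _ = num_examples  # accepted only to show that examples do not change the price
    return num_inputs / 1000 * PRICE_PER_1K_CLASSIFICATIONS


# Hypothetical workload: 50,000 support tickets classified with 10 labelled examples.
print(round(classify_cost(50_000, num_examples=10), 2))  # 10.0
```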

Summarize API Pricing

Pricing Metric: Per Token

The default pricing is set at $15 per 1 million tokens.

Charges are based on the overall tokens processed, which includes both input and output tokens.

Rerank Pricing

Pricing Metric: Per Search Unit

The default pricing is set at $1 per 1,000 search units.

A single search unit is one query with up to 100 documents to be ranked. Documents exceeding 510 tokens (including the length of the search query) are split into multiple chunks, with each chunk counted as a search unit for pricing purposes.
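Search units are the least obvious of Cohere's metrics, so here is a rough Python estimator. It rests on a stated assumption about how to read the rule. The query and each document chunk must fit within 510 tokens together, and every chunk counts as one document toward the 100-document limit of a search unit. If every chunk were instead billed as its own search unit, the estimate would be higher. The result set and the function name are hypothetical.

```python
import math

MAX_CHUNK_TOKENS = 510     # query + document length at which splitting starts (quoted above)
DOCS_PER_SEARCH_UNIT = 100  # documents ranked per search unit (quoted above)
PRICE_PER_1K_UNITS = 1.00   # default Rerank price quoted above (US$)


def search_units(query_tokens: int, doc_tokens: list[int]) -> int:
    """Estimate search units for one query, assuming each chunk counts as one document."""
    room = MAX_CHUNK_TOKENS - query_tokens  # document tokens that fit alongside the query
    if room <= 0:
        raise ValueError("query too long to leave room for document text")
    # Every document is at least one chunk; long ones are split into several.
    chunks = sum(max(1, math.ceil(tokens / room)) for tokens in doc_tokens)
    return math.ceil(chunks / DOCS_PER_SEARCH_UNIT)


docs = [300] * 150 + [1_200] * 10  # hypothetical result set: 180 chunks in total
units = search_units(query_tokens=10, doc_tokens=docs)
queries = 1_000
print(units, units * queries / 1_000 * PRICE_PER_1K_UNITS)
```

Under this reading, each query costs 2 search units, and 1,000 such queries cost about $2.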

Comparing Cohere AI and Open.ai GPT 4

Are Cohere’s offer and Open.ai’s offer comparable? That is a complex question, as the answer will depend on the specifics of the use case. Measuring models by the number of parameters they contain may not give much insight into how useful they are. That said, GPT-4 is reportedly a lot bigger, with about one trillion parameters compared to only 52 billion or so for Cohere.

For those who want to dive into comparing LLMs, the Centre for Research on Foundation Models at Stanford University has a project on this. The project is known as HELM (Holistic Evaluation of Language Models) and currently compares 36 models across 42 scenarios using 57 metrics. Ibbaka will analyse the pricing of each of these models in a future post.