On the design of skill surveys

Steven Forth is a Managing Partner at Ibbaka. See his Skill Profile on Ibbaka Talent.

One way organizations try to get a picture of their skills is through surveys. Sometimes these are part of skill management platforms, but more commonly they are done using a tool like Qualtrics or SurveyMonkey. These days, we are even seeing some surveys done in Google Forms or by distribution of spreadsheets by email. Ouch.

Are surveys a good way to get skill insights? Generally no. There are several reasons for this.

Completion rates are low and participation is patchy.

Most of the surveys use some sort of list of skills, based on the assumption that the survey authors know the relevant skills.

There are long gaps between the surveys, which are frequently annual, sometimes quarterly, but skills change much more quickly than this.

The person taking the survey does not get much value from taking it. Sometimes it is an input into a performance review. Most of the people I know do not find performance reviews to be life-enhancing.

There are deeper reasons for the failure of skill surveys and assessments to gather real insights on skills: skills are social, connected and dynamic. Ignoring these properties means that most skill surveys are useless.

Skills are social

Skills have meaning in the context of the work that we do together and our conversations with each other. Taking a skill survey on a laptop does not get to this social nature. Allowing people to suggest skills to each other and to give feedback on each other’s skills can give insights that a simple survey cannot. Even seeing what skills people suggest can give a lot of insight into the skills that are most important to the work done together.

Skills are connected

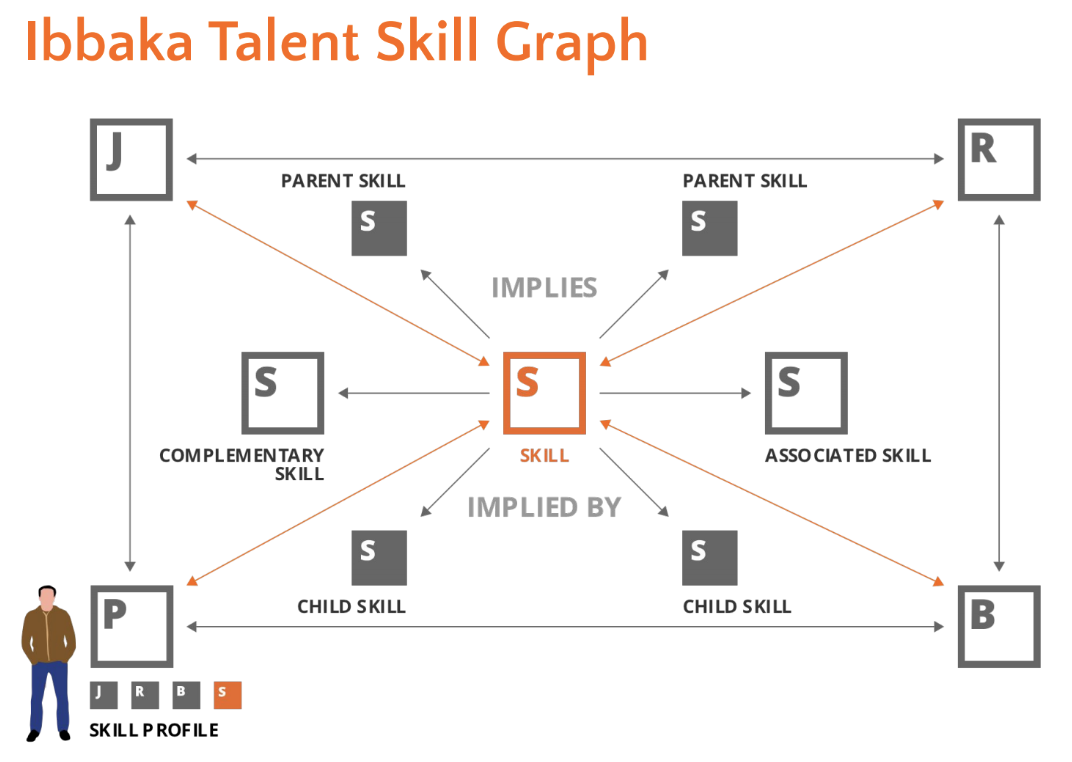

Skills also have meaning in the context of other skills. Ibbaka Talent is built on a Skill Graph that connects skills to other skills, to jobs, roles, and teams.
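To make the idea of connected skills concrete, here is a minimal sketch of a graph that links skills to other skills, roles, and teams. It is an illustration only, not Ibbaka Talent's implementation; the `SkillGraph` class, its methods, and the example skills and roles are all hypothetical.

```python
from collections import defaultdict


class SkillGraph:
    """Undirected graph whose nodes are (kind, name) pairs (hypothetical design)."""

    def __init__(self):
        self._edges = defaultdict(set)

    def connect(self, a, b):
        # Store the link in both directions so lookups work from either end.
        self._edges[a].add(b)
        self._edges[b].add(a)

    def neighbors(self, node, kind=None):
        # Return the names of connected nodes, optionally filtered by kind.
        # .get avoids creating an empty entry for an unknown node.
        linked = self._edges.get(node, set())
        return sorted(name for k, name in linked if kind is None or k == kind)


# Illustrative data, not taken from any real skill profile.
graph = SkillGraph()
graph.connect(("skill", "survey design"), ("skill", "design thinking"))
graph.connect(("skill", "survey design"), ("role", "researcher"))
graph.connect(("skill", "design thinking"), ("team", "product"))

related_skills = graph.neighbors(("skill", "survey design"), kind="skill")
roles = graph.neighbors(("skill", "survey design"), kind="role")
```

Even in this toy form, asking for the neighbors of a skill returns context (related skills, roles) that a flat list of skills cannot.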

Skills are dynamic

Skills, and even more importantly, the connections between skills can change rapidly. As a simple example, since the beginning of this year (2020), we have been investigating the use of a Design Structure Matrix (DSM) to connect skills to processes and to better understand the different ways skills are connected. An early glimpse of some of this work has been shared on LinkedIn. This project has generated many new skills for us and created new connections between people.

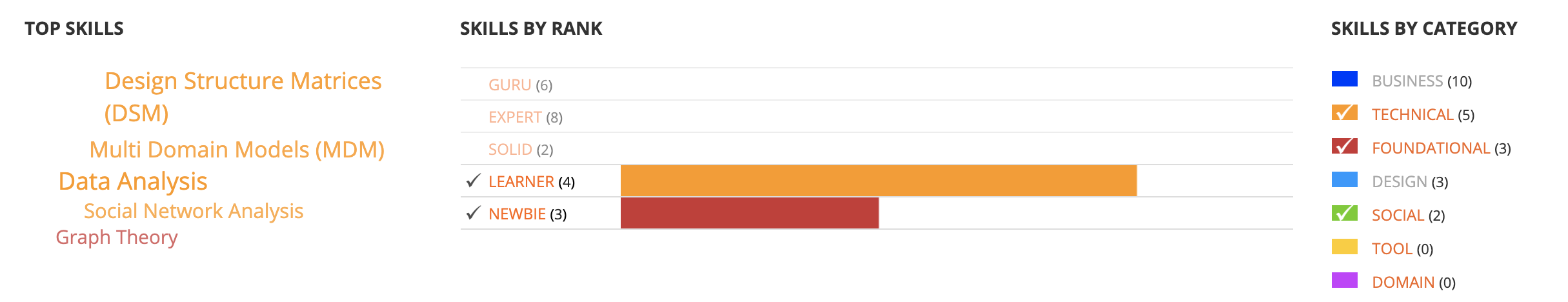

The four Learner skills and three Newbie skills are new to the team this year.

As more people join the team and we gain experience with Design Structure Matrix (DSM) and Multi Domain Models (MDM), the team skill profile is likely to change rapidly.
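One way to picture connecting skills to processes is a small matrix with skills as rows and processes as columns. The sketch below assumes that representation and derives a skill-to-skill matrix by counting the processes each pair of skills shares. The skill and process names are invented for illustration, and this is not a description of the DSM work mentioned above.

```python
# Rows are skills, columns are processes. A 1 means the skill is used in the process.
# All names and values are hypothetical.
skills = ["interviewing", "survey design", "data analysis"]
processes = ["discovery", "pricing study"]
uses = [
    [1, 0],  # interviewing
    [1, 1],  # survey design
    [0, 1],  # data analysis
]


def skill_dsm(uses):
    """Count the processes each pair of skills shares (uses times its transpose)."""
    n = len(uses)
    return [
        [sum(a * b for a, b in zip(uses[i], uses[j])) for j in range(n)]
        for i in range(n)
    ]


dsm = skill_dsm(uses)
```

Here `dsm[0][1]` is 1 because interviewing and survey design share one process, while `dsm[0][2]` is 0 because interviewing and data analysis share none. Adding a process or a skill changes the connections immediately, which is the dynamic quality described above.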

Designing skill surveys

Sometimes a skill survey is a good way to gather some initial information. This information can be used to seed profiles and avoid the cold start problem that hinders the adoption of so many new applications (this is the main way we use surveys) or to explore open issues in a community (we do this too). A survey by itself is not enough to give real insights, but it can be one step on the path.

We try to apply design thinking to survey design. We start by working to understand the people who will be taking the survey. We explore why they might be willing to take it, what fears the survey may stir up, and how the survey can return value to the people taking it. In other words, we begin with empathy.

We then explore and test a number of different survey designs. We tend to use SurveyMonkey, though we are exploring other tools, and at some point in the near future most surveys will be delivered through chatbots (robot applications capable of simple, scripted conversations). These bots will be as likely to pop up in Slack and Microsoft Teams as in a conventional survey form.

Finally, we look hard at the value of the survey to the person taking it. This value can surface in several different ways. Taking a well-designed skill survey should help a person reflect on their own skills and how they use these skills with other people. Reflection is something that most of us could spend more time on.

The survey should help participants discover their own potential, and on completing it they should feel celebrated and not judged.

If you ask someone to take a survey, you should have the grace to offer them some form of feedback. This can take many forms. One of the best is to give people immediate feedback and benchmarking of their responses as soon as they complete the survey. Another is to offer a report that summarizes the results of the survey and shares insights. Whatever form it takes, make sure to share something in return.

Some simple rules for survey design

Start by making an emotional connection. Ask something simple that inspires the imagination or gets people in contact with their feelings and ideas.

Respect people’s time. Keep the survey to fewer than ten questions if possible, and certainly fewer than twenty.

Mix it up. Use a variety of different question styles.

Include some, but not too many, open questions.

Where possible, include ‘other’ as a response and let people tell you what you are missing.

Build in opportunities for reflection.

Provide feedback.

(The following are specific to skill surveys.)

Connect skills to other things. Don’t just ask about skills, but ask what skills are used with other people or roles and what skills are used for specific processes or tasks.

Leave room for people to suggest new skills. A good skill survey is not just a list of skills. It is a place for discovery.

Include core skills (skills essential to current work), as well as aspirational or target skills. Skill surveys are as much about the future as the present.
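Some of the rules above are mechanical enough to check automatically on a draft survey. The sketch below is one possible checker, not a tool we use. The `Question` fields, the style names, and the threshold for "too many" open questions (more than half) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Question:
    text: str
    style: str               # assumed labels, e.g. "scale", "choice", "open"
    has_other: bool = False  # whether an 'other' response is offered


def check_survey(questions):
    """Return a list of warnings for a draft survey (empty if none apply)."""
    warnings = []
    n = len(questions)
    if n >= 20:
        warnings.append("too long: keep it under twenty questions")
    elif n >= 10:
        warnings.append("long: aim for fewer than ten questions")
    if len({q.style for q in questions}) < 2:
        warnings.append("mix it up: use more than one question style")
    open_count = sum(q.style == "open" for q in questions)
    if open_count == 0:
        warnings.append("include at least one open question")
    elif open_count > n / 2:  # assumed threshold for 'too many'
        warnings.append("too many open questions")
    if any(q.style == "choice" and not q.has_other for q in questions):
        warnings.append("add an 'other' option to every choice question")
    return warnings


# Hypothetical drafts: one that follows the rules and one that does not.
draft = [
    Question("What skill did you enjoy using most this year?", "open"),
    Question("Which skills do you use with designers?", "choice", has_other=True),
    Question("How confident are you in survey design?", "scale"),
]
bad_draft = [Question("Pick a skill", "choice")] * 12

draft_warnings = check_survey(draft)
bad_warnings = check_survey(bad_draft)
```

A checker like this covers only the countable rules. Whether a survey makes an emotional connection or leaves people feeling celebrated still takes human judgment and testing.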

Some open surveys

At Ibbaka, we do like surveys, and we normally have several open. Here are some that are open.

These surveys could be improved. So don’t just take the surveys; let us know how we can improve them by sending a message to info@ibbaka.com.