The Ethics of Pricing AI

Steven Forth is a Managing Partner at Ibbaka. See his Skill Profile on Ibbaka Talio.

AI will find many uses in B2B pricing over the next few years. It will be used to build out value models, infer pricing models from these value models, set prices, configure complex solutions for value optimization (value optimization coming before price optimization), and so on. AI will also find applications in pricing research. Generative AIs like OpenAI’s GPT or Google’s Bard will be used to answer pricing research questions and to improve the design of pricing research.

Many of us have already taken the results of pricing research, whether market studies, financial and usage data, or conjoint analysis, and fed them into an LLM (Large Language Model) to get help in interpreting the data.

The next step is to augment and tune an LLM, perhaps an open-source one like LLaMA from Meta, with pricing data of various types to improve the quality of the conversation and maybe even the causal inference (for those wanting to go deep, this Microsoft Research paper is a fascinating read).

Of course, AI is nothing new to the pricing world. The heavy-metal pricing optimization companies have been using machine learning for many years in their revenue and price optimization engines. One criticism of these systems is the lack of transparency in their pricing recommendations. How far should sales, or a buyer, trust a pricing recommendation from an AI that is a black box and is difficult to understand or even to predict?

Pricing AIs are not immune to the questions and doubts around ethics and ethical use that have troubled other uses of AI.

Earlier in May, I asked the following on LinkedIn.

“What are the most important ethical questions when it comes to applying AI to pricing?

The rapid advance of AI is raising ethical questions across a range of fields. What are the most important questions for us to address as AI gets rolled out in more and more pricing applications?

Some of the most common issues in other fields are ...

Transparency - How is AI being used, how was the AI trained, what data was used, and how are the results used?

Fairness - Does the application of the AI lead to a fair result for all parties involved?

Bias - Is the AI biased, perhaps by the training data or the approach taken to training?

Displacement - Will the use of AI cause people to lose jobs, how will it change the workforce?

Externalities - How will use of AI impact people who are not part of the transaction?”

Here are the answers I got:

Some people took the time to add detailed comments. I want to thank Karan Sood for this contribution.

“All of the above!

Feels like we don’t have enough information out there for what’s AI’s impact and what do we really intend to do with AI in pricing. Like are we talking about LLM and generative AI ? But that’s not analysis or modelling. That’s just language processing…

So hard to tell. However, just overall these are the issues I have with LLM/AI application right now based on your poll:

1) Transparency: So I agree, it’s a black hole. Our input is used to train new output. So goes without saying don’t feed anything confidential. Samsung learned the hard way.

2) Bias: we know LLM’s are trained to walk a certain line. But they also are insanely easy to manipulate. An example is circulating where ChatGPT refuses to give a list of pirating websites, but then gives in when it’s carefully worded. You can use that use case to go into any social issue rabbit hole.

3) Fairness: From a fairness POV we need to make sure we don’t start to disproportionately start to impact a certain sector or demographic and create a generational gap once again…we are barely crawling out of one gender equity gap in business.

4) Misinformation: ChatGPT 3.5 is still out there making up info and making up links that don’t exist or work.

5) Lack of standards!”

I was one of those who chose ‘transparency’ as an answer. My thinking is that (i) transparency is one way to reduce bias and (ii) transparency is central to fairness in pricing.

Transparency in Pricing and Pricing AIs

Back in March 2021, Ibbaka hosted a conversation between three pricing leaders on pricing transparency. Xiaohe Li, Stella Penso, and Kyle Westra went deep on what pricing transparency means. We summarized their conclusions in the table below.

What seems relevant to the ethics of pricing AI conversation are the two points on the pricing process.

“Pricing methods are understood.”

“Pricing algorithms are explained.”

There is a risk that as more and more B2B pricing is generated by AIs, it will become less and less transparent. We will not know how the price was set and will not be able to explain the algorithms used to set the price.

Another way of saying transparency in the context of AI is explainable AI or xAI. The NIST has proposed four principles for xAI that will be relevant to the use of AI in pricing.

Explanation: A system delivers or contains accompanying evidence or reason(s) for outputs and/or processes.

Meaningful: A system provides explanations that are understandable to the intended consumer(s).

Explanation Accuracy: An explanation correctly reflects the reason for generating the output and/or accurately reflects the system’s process.

Knowledge Limits: A system only operates under the conditions for which it was designed and when it reaches sufficient confidence in its output.

All four of these principles can and should be applied to pricing AIs.
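To make this concrete, here is a minimal sketch of how the four principles might shape the output of a pricing AI. Everything in it is an assumption for illustration: the `PriceRecommendation` structure, the `recommend` function, the additive adjustment model, and the 0.7 confidence threshold are hypothetical and are not drawn from NIST or from any real pricing system.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

MIN_CONFIDENCE = 0.7  # assumed threshold, chosen only for illustration


@dataclass
class PriceRecommendation:
    """A recommendation that carries its own explanation (hypothetical)."""
    price: Optional[float]  # None when the system declines to recommend
    confidence: float       # 0.0 to 1.0, as reported by the model
    drivers: Dict[str, float] = field(default_factory=dict)
    explanation: str = ""


def recommend(base_price: float, adjustments: Dict[str, float],
              confidence: float, in_scope: bool) -> PriceRecommendation:
    # Knowledge limits: decline when the request is outside the conditions
    # the system was designed for, or when confidence is too low.
    if not in_scope or confidence < MIN_CONFIDENCE:
        return PriceRecommendation(
            price=None,
            confidence=confidence,
            explanation="No recommendation: outside design conditions "
                        "or confidence too low.",
        )
    price = base_price + sum(adjustments.values())
    # Explanation and Meaningful: name each driver in plain language,
    # largest effect first, so sales or a buyer can follow the reasoning.
    ranked = sorted(adjustments.items(), key=lambda kv: abs(kv[1]), reverse=True)
    parts = [f"{name} ({amount:+.2f})" for name, amount in ranked]
    explanation = (f"Base price {base_price:.2f}, adjusted for "
                   + ", ".join(parts) + ".")
    return PriceRecommendation(price, confidence, dict(adjustments), explanation)


def explanation_is_accurate(rec: PriceRecommendation, base_price: float) -> bool:
    # Explanation Accuracy: the stated drivers must actually add up
    # to the recommended price.
    if rec.price is None:
        return False
    return abs(base_price + sum(rec.drivers.values()) - rec.price) < 1e-9


rec = recommend(100.0, {"usage volume": 20.0, "contract length": -5.0}, 0.9, True)
print(rec.price, rec.explanation)
```

A real pricing model will rarely be a simple sum of adjustments, so producing drivers that faithfully reflect the model is the hard part. The sketch only shows where each principle would sit in the output.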

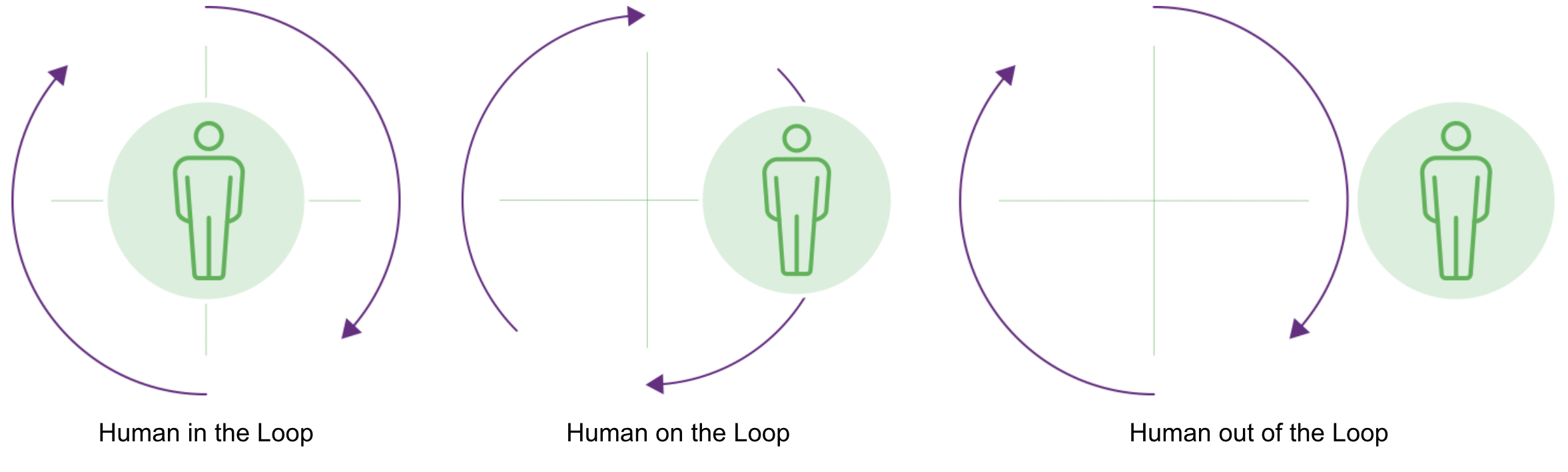

Another perspective on the ethics of pricing AIs comes from the (frightening) world of autonomous weapons. Here the constructs of human-in-the-loop, human-on-the-loop, and human-out-of-the-loop are used to define levels of human control and involvement.

human-in-the-loop: a human must instigate the action of the weapon (in other words not fully autonomous)

human-on-the-loop: a human may abort an action

human-out-of-the-loop: no human action is involved

These approaches are relevant to pricing. Most current systems are either human-in-the-loop or human-on-the-loop, which gives the human doing the pricing a level of control, and with control comes accountability. This is not always the case though. Automated trading systems, for example, are human-out-of-the-loop in many cases, and move too fast for human intervention. As we move to more and more M2M (Machine to Machine) business models we are going to see more use of pricing AIs with humans out of the loop. This should give us pause.
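The three levels of control can be sketched as a gate between a proposed price and the price actually put into effect. This is an illustrative sketch only: the `Control` enum, the `apply_price` function, and the convention of passing the human's decision as `True`, `False`, or `None` are assumptions made for this example, not a description of any existing pricing system.

```python
from enum import Enum
from typing import Optional


class Control(Enum):
    IN_THE_LOOP = "a human must approve before the price is applied"
    ON_THE_LOOP = "the price is applied unless a human aborts"
    OUT_OF_THE_LOOP = "the price is applied with no human involvement"


def apply_price(proposed: float, current: float, mode: Control,
                human_decision: Optional[bool] = None) -> float:
    """Return the price in effect after the control gate.

    human_decision: True = approve, False = reject or abort,
    None = the human has not acted.
    """
    if mode is Control.IN_THE_LOOP:
        # Nothing changes until a human explicitly approves.
        return proposed if human_decision is True else current
    if mode is Control.ON_THE_LOOP:
        # The change goes ahead unless a human explicitly aborts it.
        return current if human_decision is False else proposed
    # Out of the loop: the human decision, if any, is never consulted.
    return proposed


# With no human input, only the in-the-loop mode holds the current price.
for mode in Control:
    print(mode.name, apply_price(120.0, 100.0, mode))
```

The difference between the modes shows up when the human does nothing: in-the-loop defaults to no change, while the other two default to the machine's price.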

Pricing leaders need to be leading the discussion of pricing transparency and control. If we fail to do this we are inviting accusations that we are poor stewards of the pricing discipline and that we have lost control over and understanding of the fundamentals of our discipline.

Pricing Fairness and Pricing AIs

At the end of the day, the goal of pricing transparency and AI is pricing fairness. The reason we are concerned with bias (which drew 26% of the responses in the poll) is that it puts fairness in question. AIs developed using machine learning are notorious for being biased because of the data sets used to train them or to tag the data (in supervised learning). But what do we mean when we say ‘fairness’ in the context of pricing?

Back in 2018, Ibbaka proposed three principles of pricing fairness that we use in working with our clients.

Is it clear how we set prices? Can we explain this to ourselves? Can we explain this to our customers? Is our website clear on this? Can sales communicate it?

Is our pricing consistent? Do we treat similar customers in the same way? Is our discounting policy clear and consistent?

Are we creating differentiated value? Do we use this to set prices? Do we adjust prices based on the value we are creating? Are we sharing that value with our customers?

It seems to me that these can be connected to fairness in pricing AIs. The first principle speaks to the need for transparency. Without transparency, we will struggle to have ethical pricing AIs.

Principle two needs to be strengthened, for as stated it is not strong enough to deal with the bias risk in AI. We are still working on how to reframe this principle. Here is an attempt.

“Is our pricing unbiased?” Do we treat all of our customers in a way that respects their backgrounds and needs and does not take advantage of their situation or weakness?

Hmm, that does not seem quite right. We will continue to work on this and, more importantly, make these principles part of our work and part of our AI development.

The third principle, concerning differentiated value and how value is shared, still seems sound. We should be able to develop pricing AIs that help us get better at understanding value and then working through the full cycle of creating, communicating, delivering, documenting, and then capturing part of that value back in price.